使用Qdrant和DeepSeek在5分钟内搭建RAG

| 时间:5 分钟 | 级别:新手 | 输出:GitHub |

|---|

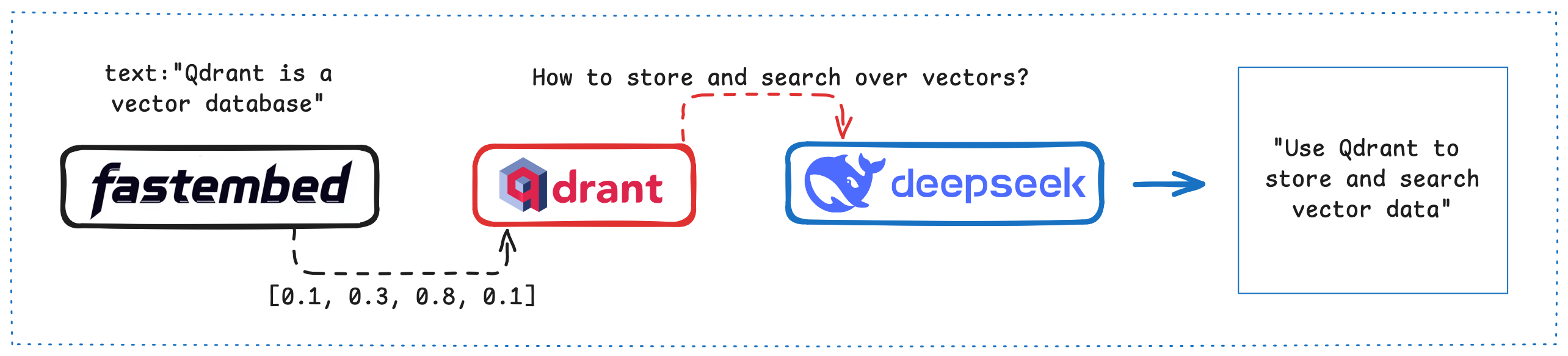

本教程演示了如何使用 Qdrant 作为向量存储解决方案,并利用 DeepSeek 进行语义查询丰富,来构建一个检索增强生成 (RAG) 管道。RAG 管道通过提供上下文相关数据来增强大型语言模型 (LLM) 的响应。

概述

在本教程中,我们将

- 使用 FastEmbed 将示例文本转换为向量。

- 将向量发送到 Qdrant 集合。

- 将 Qdrant 和 DeepSeek 连接成一个最小的 RAG 管道。

- 向 DeepSeek 提出不同的问题并测试答案准确性。

- 使用从 Qdrant 检索到的内容丰富 DeepSeek 提示。

- 评估之前和之后的答案准确性。

架构

先决条件

确保您已具备以下条件

- Python 环境 (3.9+)

- 访问 Qdrant Cloud

- 来自 DeepSeek 平台的 DeepSeek API 密钥

设置 Qdrant

pip install "qdrant-client[fastembed]>=1.14.1"

Qdrant 将充当知识库,为我们将发送给 LLM 的提示提供上下文信息。

您可以在 http://cloud.qdrant.io 获取一个永久免费的 Qdrant 云实例。请参阅快速入门了解如何设置实例。

QDRANT_URL = "https://xyz-example.eu-central.aws.cloud.qdrant.io:6333"

QDRANT_API_KEY = "<your-api-key>"

实例化 Qdrant 客户端

from qdrant_client import QdrantClient, models

client = QdrantClient(url=QDRANT_URL, api_key=QDRANT_API_KEY)

构建知识库

Qdrant 将使用我们事实的向量嵌入来丰富原始提示的上下文。因此,我们需要存储向量嵌入以及用于生成它们的事实。

我们将通过 FastEmbed 使用 bge-base-en-v1.5 模型 - 一个轻量、快速的 Python 嵌入生成库。

Qdrant 客户端提供了与 FastEmbed 的便捷集成,使得构建知识库非常简单。

首先,我们需要创建一个集合,这样 Qdrant 就能知道它将处理哪些向量,然后,我们只需将封装在 models.Document 中的原始文档传递进去,即可计算并上传嵌入。

collection_name = "knowledge_base"

model_name = "BAAI/bge-small-en-v1.5"

client.create_collection(

collection_name=collection_name,

vectors_config=models.VectorParams(size=384, distance=models.Distance.COSINE)

)

documents = [

"Qdrant is a vector database & vector similarity search engine. It deploys as an API service providing search for the nearest high-dimensional vectors. With Qdrant, embeddings or neural network encoders can be turned into full-fledged applications for matching, searching, recommending, and much more!",

"Docker helps developers build, share, and run applications anywhere — without tedious environment configuration or management.",

"PyTorch is a machine learning framework based on the Torch library, used for applications such as computer vision and natural language processing.",

"MySQL is an open-source relational database management system (RDBMS). A relational database organizes data into one or more data tables in which data may be related to each other; these relations help structure the data. SQL is a language that programmers use to create, modify and extract data from the relational database, as well as control user access to the database.",

"NGINX is a free, open-source, high-performance HTTP server and reverse proxy, as well as an IMAP/POP3 proxy server. NGINX is known for its high performance, stability, rich feature set, simple configuration, and low resource consumption.",

"FastAPI is a modern, fast (high-performance), web framework for building APIs with Python 3.7+ based on standard Python type hints.",

"SentenceTransformers is a Python framework for state-of-the-art sentence, text and image embeddings. You can use this framework to compute sentence / text embeddings for more than 100 languages. These embeddings can then be compared e.g. with cosine-similarity to find sentences with a similar meaning. This can be useful for semantic textual similar, semantic search, or paraphrase mining.",

"The cron command-line utility is a job scheduler on Unix-like operating systems. Users who set up and maintain software environments use cron to schedule jobs (commands or shell scripts), also known as cron jobs, to run periodically at fixed times, dates, or intervals.",

]

client.upsert(

collection_name=collection_name,

points=[

models.PointStruct(

id=idx,

vector=models.Document(text=document, model=model_name),

payload={"document": document},

)

for idx, document in enumerate(documents)

],

)

设置 DeepSeek

RAG 改变了我们与大型语言模型的交互方式。我们将一个模型可能创建反事实答案的知识导向型任务,转换为一个语言导向型任务。后者期望模型提取有意义的信息并生成答案。正确实施的 LLM 应该执行语言导向型任务。

该任务始于用户发送的原始提示。然后将相同的提示向量化,并用作搜索最相关事实的查询。这些事实与原始提示结合,构建一个包含更多信息的更长提示。

但让我们先简单地直接提问。

prompt = """

What tools should I need to use to build a web service using vector embeddings for search?

"""

使用 Deepseek API 需要提供 API 密钥。您可以从 DeepSeek 平台获取。

现在我们终于可以调用补全 API 了。

import requests

import json

# Fill the environmental variable with your own Deepseek API key

# See: https://platform.deepseek.com/api_keys

API_KEY = "<YOUR_DEEPSEEK_KEY>"

HEADERS = {

"Authorization": f"Bearer {API_KEY}",

"Content-Type": "application/json",

}

def query_deepseek(prompt):

data = {

"model": "deepseek-chat",

"messages": [{"role": "user", "content": prompt}],

"stream": False,

}

response = requests.post(

"https://api.deepseek.com/chat/completions", headers=HEADERS, data=json.dumps(data)

)

if response.ok:

result = response.json()

return result["choices"][0]["message"]["content"]

else:

raise Exception(f"Error {response.status_code}: {response.text}")

以及查询

query_deepseek(prompt)

响应是

"Building a web service that uses vector embeddings for search involves several components, including data processing, embedding generation, storage, search, and serving the service via an API. Below is a list of tools and technologies you can use for each step:\n\n---\n\n### 1. **Data Processing**\n - **Python**: For general data preprocessing and scripting.\n - **Pandas**: For handling tabular data.\n - **NumPy**: For numerical operations.\n - **NLTK/Spacy**: For text preprocessing (tokenization, stemming, etc.).\n - **LLM models**: For generating embeddings if you're using pre-trained models.\n\n---\n\n### 2. **Embedding Generation**\n - **Pre-trained Models**:\n - Embeddings (e.g., `text-embedding-ada-002`).\n - Hugging Face Transformers (e.g., `Sentence-BERT`, `all-MiniLM-L6-v2`).\n - Google's Universal Sentence Encoder.\n - **Custom Models**:\n - TensorFlow/PyTorch: For training custom embedding models.\n - **Libraries**:\n - `sentence-transformers`: For generating sentence embeddings.\n - `transformers`: For using Hugging Face models.\n\n---\n\n### 3. **Vector Storage**\n - **Vector Databases**:\n - Pinecone: Managed vector database for similarity search.\n - Weaviate: Open-source vector search engine.\n - Milvus: Open-source vector database.\n - FAISS (Facebook AI Similarity Search): Library for efficient similarity search.\n - Qdrant: Open-source vector search engine.\n - Redis with RedisAI: For storing and querying vectors.\n - **Traditional Databases with Vector Support**:\n - PostgreSQL with pgvector extension.\n - Elasticsearch with dense vector support.\n\n---\n\n### 4. **Search and Retrieval**\n - **Similarity Search Algorithms**:\n - Cosine similarity, Euclidean distance, or dot product for comparing vectors.\n - **Libraries**:\n - FAISS: For fast nearest-neighbor search.\n - Annoy (Approximate Nearest Neighbors Oh Yeah): For approximate nearest neighbor search.\n - **Vector Databases**: Most vector databases (e.g., Pinecone, Weaviate) come with built-in search capabilities.\n\n---\n\n### 5. **Web Service Framework**\n - **Backend Frameworks**:\n - Flask/Django/FastAPI (Python): For building RESTful APIs.\n - Node.js/Express: If you prefer JavaScript.\n - **API Documentation**:\n - Swagger/OpenAPI: For documenting your API.\n - **Authentication**:\n - OAuth2, JWT: For securing your API.\n\n---\n\n### 6. **Deployment**\n - **Containerization**:\n - Docker: For packaging your application.\n - **Orchestration**:\n - Kubernetes: For managing containers at scale.\n - **Cloud Platforms**:\n - AWS (EC2, Lambda, S3).\n - Google Cloud (Compute Engine, Cloud Functions).\n - Azure (App Service, Functions).\n - **Serverless**:\n - AWS Lambda, Google Cloud Functions, or Vercel for serverless deployment.\n\n---\n\n### 7. **Monitoring and Logging**\n - **Monitoring**:\n - Prometheus + Grafana: For monitoring performance.\n - **Logging**:\n - ELK Stack (Elasticsearch, Logstash, Kibana).\n - Fluentd.\n - **Error Tracking**:\n - Sentry.\n\n---\n\n### 8. **Frontend (Optional)**\n - **Frontend Frameworks**:\n - React, Vue.js, or Angular: For building a user interface.\n - **Libraries**:\n - Axios: For making API calls from the frontend.\n\n---\n\n### Example Workflow\n1. Preprocess your data (e.g., clean text, tokenize).\n2. Generate embeddings using a pre-trained model (e.g., Hugging Face).\n3. Store embeddings in a vector database (e.g., Pinecone or FAISS).\n4. Build a REST API using FastAPI or Flask to handle search queries.\n5. Deploy the service using Docker and Kubernetes or a serverless platform.\n6. Monitor and scale the service as needed.\n\n---\n\n### Example Tools Stack\n- **Embedding Generation**: Hugging Face `sentence-transformers`.\n- **Vector Storage**: Pinecone or FAISS.\n- **Web Framework**: FastAPI.\n- **Deployment**: Docker + AWS/GCP.\n\nBy combining these tools, you can build a scalable and efficient web service for vector embedding-based search."

扩展提示

尽管最初的答案听起来可信,但它并未正确回答我们的问题。相反,它给出了一个应用程序堆栈的通用描述。为了改善结果,用可用工具的描述来丰富原始提示似乎是一种可能性。让我们使用语义知识库来用不同技术的描述来增强提示!

results = client.query_points(

collection_name=collection_name,

query=models.Document(text=prompt, model=model_name),

limit=3,

)

results

这是响应

QueryResponse(points=[

ScoredPoint(id=0, version=0, score=0.67437416, payload={'document': 'Qdrant is a vector database & vector similarity search engine. It deploys as an API service providing search for the nearest high-dimensional vectors. With Qdrant, embeddings or neural network encoders can be turned into full-fledged applications for matching, searching, recommending, and much more!'}, vector=None, shard_key=None, order_value=None),

ScoredPoint(id=6, version=0, score=0.63144326, payload={'document': 'SentenceTransformers is a Python framework for state-of-the-art sentence, text and image embeddings. You can use this framework to compute sentence / text embeddings for more than 100 languages. These embeddings can then be compared e.g. with cosine-similarity to find sentences with a similar meaning. This can be useful for semantic textual similar, semantic search, or paraphrase mining.'}, vector=None, shard_key=None, order_value=None),

ScoredPoint(id=5, version=0, score=0.6064749, payload={'document': 'FastAPI is a modern, fast (high-performance), web framework for building APIs with Python 3.7+ based on standard Python type hints.'}, vector=None, shard_key=None, order_value=None)

])

我们使用原始提示对工具描述集执行了语义搜索。现在我们可以使用这些描述来增强提示并创建更多上下文。

context = "\n".join(r.payload['document'] for r in results.points)

context

响应是

'Qdrant is a vector database & vector similarity search engine. It deploys as an API service providing search for the nearest high-dimensional vectors. With Qdrant, embeddings or neural network encoders can be turned into full-fledged applications for matching, searching, recommending, and much more!\nFastAPI is a modern, fast (high-performance), web framework for building APIs with Python 3.7+ based on standard Python type hints.\nPyTorch is a machine learning framework based on the Torch library, used for applications such as computer vision and natural language processing.'

最后,让我们构建一个元提示,它结合了 LLM 假定的角色、原始问题以及我们语义搜索的结果,这将强制我们的 LLM 使用提供的上下文。

通过这样做,我们有效地将知识导向型任务转换为语言任务,并有望减少幻觉的可能性。它也应该使响应听起来更相关。

metaprompt = f"""

You are a software architect.

Answer the following question using the provided context.

If you can't find the answer, do not pretend you know it, but answer "I don't know".

Question: {prompt.strip()}

Context:

{context.strip()}

Answer:

"""

# Look at the full metaprompt

print(metaprompt)

响应

You are a software architect.

Answer the following question using the provided context.

If you can't find the answer, do not pretend you know it, but answer "I don't know".

Question: What tools should I need to use to build a web service using vector embeddings for search?

Context:

Qdrant is a vector database & vector similarity search engine. It deploys as an API service providing search for the nearest high-dimensional vectors. With Qdrant, embeddings or neural network encoders can be turned into full-fledged applications for matching, searching, recommending, and much more!

FastAPI is a modern, fast (high-performance), web framework for building APIs with Python 3.7+ based on standard Python type hints.

PyTorch is a machine learning framework based on the Torch library, used for applications such as computer vision and natural language processing.

Answer:

我们当前的提示要长得多,而且我们还使用了几种策略来使响应更好

- LLM 扮演软件架构师的角色。

- 我们提供更多上下文来回答问题。

- 如果上下文不包含有意义的信息,模型不应编造答案。

让我们看看这是否按预期工作。

问题

query_deepseek(metaprompt)

答案

'To build a web service using vector embeddings for search, you can use the following tools:\n\n1. **Qdrant**: As a vector database and similarity search engine, Qdrant will handle the storage and retrieval of high-dimensional vectors. It provides an API service for searching and matching vectors, making it ideal for applications that require vector-based search functionality.\n\n2. **FastAPI**: This web framework is perfect for building the API layer of your web service. It is fast, easy to use, and based on Python type hints, which makes it a great choice for developing the backend of your service. FastAPI will allow you to expose endpoints that interact with Qdrant for vector search operations.\n\n3. **PyTorch**: If you need to generate vector embeddings from your data (e.g., text, images), PyTorch can be used to create and train neural network models that produce these embeddings. PyTorch is a powerful machine learning framework that supports a wide range of applications, including natural language processing and computer vision.\n\n### Summary:\n- **Qdrant** for vector storage and search.\n- **FastAPI** for building the web service API.\n- **PyTorch** for generating vector embeddings (if needed).\n\nThese tools together provide a robust stack for building a web service that leverages vector embeddings for search functionality.'

测试 RAG 管道

通过利用我们提供的语义上下文,我们的模型在回答问题方面做得更好。让我们将 RAG 封装为一个函数,这样我们就可以更轻松地为不同的提示调用它。

def rag(question: str, n_points: int = 3) -> str:

results = client.query_points(

collection_name=collection_name,

query=models.Document(text=question, model=model_name),

limit=n_points,

)

context = "\n".join(r.payload["document"] for r in results.points)

metaprompt = f"""

You are a software architect.

Answer the following question using the provided context.

If you can't find the answer, do not pretend you know it, but only answer "I don't know".

Question: {question.strip()}

Context:

{context.strip()}

Answer:

"""

return query_deepseek(metaprompt)

现在提问范围更广了。

问题

rag("What can the stack for a web api look like?")

答案

'The stack for a web API can include the following components based on the provided context:\n\n1. **Web Framework**: FastAPI can be used as the web framework for building the API. It is modern, fast, and leverages Python type hints for better development and performance.\n\n2. **Reverse Proxy/Web Server**: NGINX can be used as a reverse proxy or web server to handle incoming HTTP requests, load balancing, and serving static content. It is known for its high performance and low resource consumption.\n\n3. **Containerization**: Docker can be used to containerize the application, making it easier to build, share, and run the API consistently across different environments without worrying about configuration issues.\n\nThis stack provides a robust, scalable, and efficient setup for building and deploying a web API.'

问题

rag("Where is the nearest grocery store?")

答案

"I don't know. The provided context does not contain any information about the location of the nearest grocery store."

我们的模型现在可以

- 利用我们向量数据存储中的知识。

- 根据提供的上下文回答,它无法提供答案。

我们刚刚展示了一种有用的机制来减轻大型语言模型中幻觉的风险。