Inference

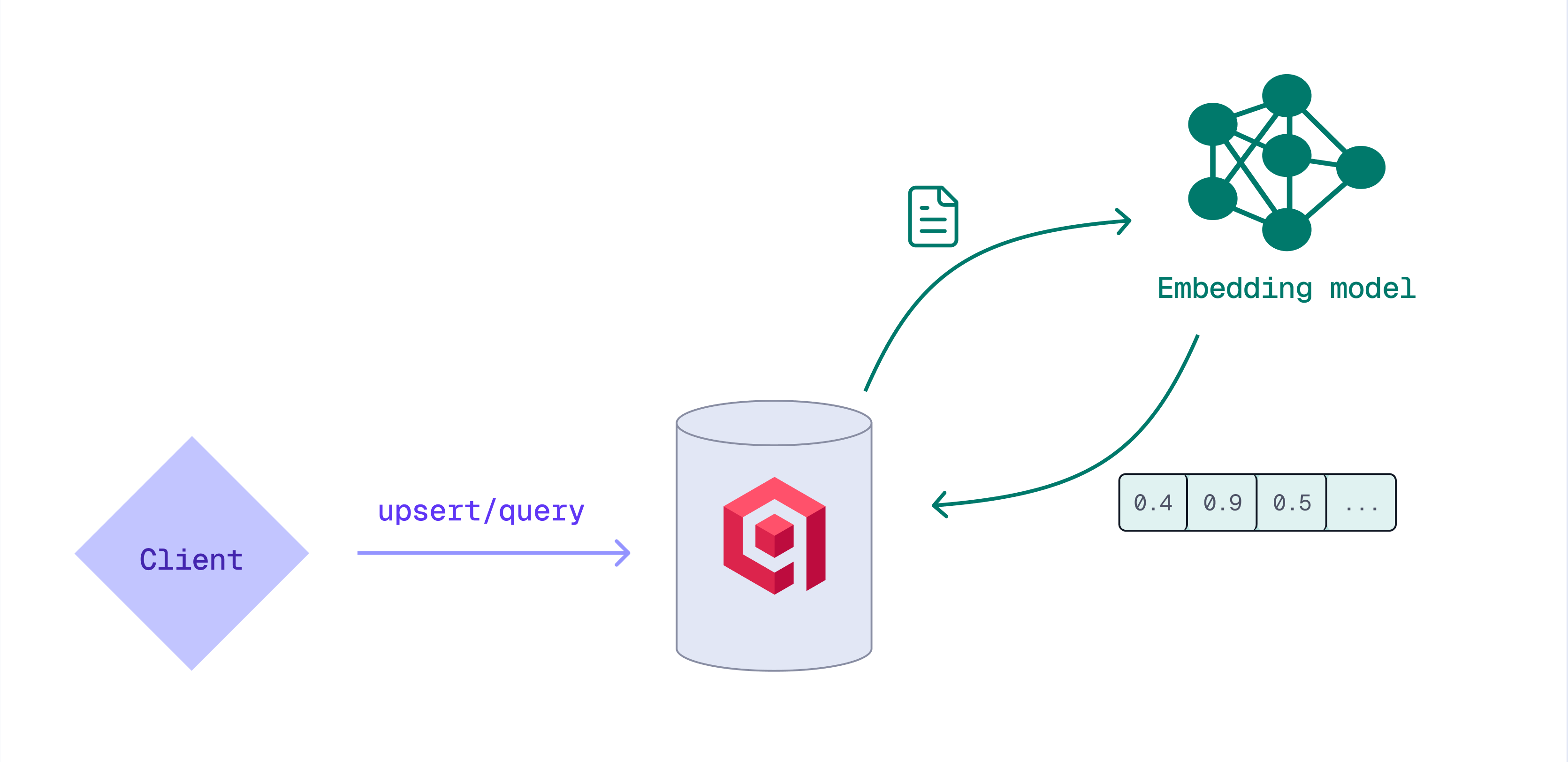

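Inference is the process of creating vector embeddings from text, images, or other data types using a machine learning model. You can create embeddings on the client side, but you can also have Qdrant generate them when you store or query data.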

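Generating embeddings with Qdrant has several advantages:

- No need for external pipelines or separate model servers.

- A single unified API instead of a different API for each model provider.

- No external network calls, which minimizes latency and data transfer overhead.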

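Depending on the model you want to use, inference can be performed:

- on the client side, using the FastEmbed library

- by the Qdrant cluster (only the BM25 model is supported)

- in Qdrant Cloud, using Cloud Inference (for clusters on Qdrant Managed Cloud)

- externally (models by OpenAI, Cohere, and Jina AI; for clusters on Qdrant Managed Cloud)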

Inference API

You can use inference in any API that accepts regular vectors. Instead of a vector, you pass a special *inference object*:

Document, for text inference:

// Document
{
  // Text input
  text: "Your text",
  // Name of the model to do inference with
  model: "<the-model-to-use>",
  // Extra parameters for the model, optional
  options: {}
}

Image, for image inference:

// Image
{
  // Image input
  image: "<url>", // Or a base64-encoded image
  // Name of the model to do inference with
  model: "<the-model-to-use>",
  // Extra parameters for the model, optional
  options: {}
}

Object, reserved for other input types that may be implemented in the future.
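As a rough illustration, the Python sketch below builds `Document` and `Image` inference objects as plain JSON-compatible dicts, which is the shape they take in REST request bodies. The helpers `document` and `image` are hypothetical and not part of any Qdrant SDK. The official clients ship their own `Document` and `Image` types.

```python
import json
from typing import Any, Optional


def document(text: str, model: str, options: Optional[dict] = None) -> dict:
    """Build a Document inference object for text inference (hypothetical helper)."""
    obj: dict[str, Any] = {"text": text, "model": model}
    if options:
        # Model-specific extra parameters are optional.
        obj["options"] = options
    return obj


def image(source: str, model: str, options: Optional[dict] = None) -> dict:
    """Build an Image inference object; `source` is a URL or a base64-encoded image."""
    obj: dict[str, Any] = {"image": source, "model": model}
    if options:
        obj["options"] = options
    return obj


if __name__ == "__main__":
    print(json.dumps(document("Your text", "<the-model-to-use>")))
    print(json.dumps(image("https://example.com/cat.png", "<the-model-to-use>")))
```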

Inference objects can be used everywhere a regular vector is accepted. For example, the following query with a raw vector:

POST /collections/<your-collection>/points/query
{
  "query": {
    "nearest": [0.12, 0.34, 0.56, 0.78, ...]
  }
}

can be replaced with:

POST /collections/<your-collection>/points/query
{
  "query": {
    "nearest": {
      "text": "My Query Text",
      "model": "<the-model-to-use>"
    }
  }
}

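In this case, Qdrant uses the embedding model named in the inference object to create a vector automatically. It then runs the search query with that vector. All of this happens inside a low-latency network.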
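The substitution above can be sketched in Python. The hypothetical helper `query_body` builds the request body for `POST /collections/<your-collection>/points/query` from either a raw vector or an inference object, mirroring the two JSON bodies shown. It only constructs the body. Sending it, for example with an HTTP client, is left out.

```python
import json
from typing import Any, Union

Vector = list[float]
InferenceObject = dict[str, Any]


def query_body(query: Union[Vector, InferenceObject]) -> dict[str, Any]:
    """Build a `nearest` query body from a raw vector or an inference object."""
    if isinstance(query, dict):
        # An inference object must name the model that Qdrant should run.
        if "model" not in query:
            raise ValueError("inference object needs a 'model' field")
    elif not all(isinstance(x, (int, float)) for x in query):
        raise TypeError("a raw vector must contain only numbers")
    return {"query": {"nearest": query}}


if __name__ == "__main__":
    print(json.dumps(query_body([0.12, 0.34, 0.56, 0.78])))
    print(json.dumps(query_body({"text": "My Query Text", "model": "<the-model-to-use>"})))
```

The body is the same in both cases. Only the value under `nearest` changes, which is why inference objects can stand in for vectors without any other change to the request.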

Server-side inference: BM25

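BM25 (Best Matching 25) is a ranking function for text search. BM25 represents documents as sparse vectors, where each dimension corresponds to a word. Qdrant can generate these sparse embeddings from input text directly on the server.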

When upserting points, provide the text and the qdrant/bm25 embedding model:

PUT /collections/{collection_name}/points
{
  "points": [
    {
      "id": 1,
      "vector": {
        "my-bm25-vector": {
          "text": "Recipe for baking chocolate chip cookies",
          "model": "qdrant/bm25"
        }
      }
    }
  ]
}

from qdrant_client import QdrantClient, models
client = QdrantClient(
    url="https://xyz-example.qdrant.io:6333",
    api_key="<your-api-key>",
    cloud_inference=True,
)
client.upsert(
    collection_name="{collection_name}",
    points=[
        models.PointStruct(
            id=1,
            vector={
                "my-bm25-vector": models.Document(
                    text="Recipe for baking chocolate chip cookies",
                    model="Qdrant/bm25",
                )
            },
        )
    ],
)

import { QdrantClient } from "@qdrant/js-client-rest";
const client = new QdrantClient({ host: "localhost", port: 6333 });
client.upsert("{collection_name}", {
  points: [
    {
      id: 1,
      vector: {
        'my-bm25-vector': {
          text: 'Recipe for baking chocolate chip cookies',
          model: 'Qdrant/bm25',
        },
      },
    },
  ],
});

use qdrant_client::{
    Payload, Qdrant, QdrantError,
    qdrant::{Document, PointStruct, UpsertPointsBuilder},
};
use std::collections::HashMap;
let client = Qdrant::from_url("<your-qdrant-url>").build()?;
client
    .upsert_points(UpsertPointsBuilder::new(
        "{collection_name}",
        vec![PointStruct::new(
            1,
            HashMap::from([(
                "my-bm25-vector".to_string(),
                Document {
                    text: "Recipe for baking chocolate chip cookies".into(),
                    model: "qdrant/bm25".into(),
                    ..Default::default()
                }
                .into(),
            )]),
            Payload::default(),
        )],
    ))
    .await?;

import static io.qdrant.client.PointIdFactory.id;
import static io.qdrant.client.VectorFactory.vector;
import static io.qdrant.client.VectorsFactory.namedVectors;
import io.qdrant.client.QdrantClient;
import io.qdrant.client.QdrantGrpcClient;
import io.qdrant.client.grpc.Points.Document;
import io.qdrant.client.grpc.Points.PointStruct;
import java.util.List;
import java.util.Map;
QdrantClient client =
    new QdrantClient(
        QdrantGrpcClient.newBuilder("xyz-example.qdrant.io", 6334, true)
            .withApiKey("<your-api-key>")
            .build());
client
    .upsertAsync(
        "{collection_name}",
        List.of(
            PointStruct.newBuilder()
                .setId(id(1))
                .setVectors(
                    namedVectors(
                        Map.of(
                            "my-bm25-vector",
                            vector(
                                Document.newBuilder()
                                    .setModel("qdrant/bm25")
                                    .setText("Recipe for baking chocolate chip cookies")
                                    .build()))))
                .build()))
    .get();

using Qdrant.Client;
using Qdrant.Client.Grpc;
var client = new QdrantClient(
    host: "xyz-example.qdrant.io", port: 6334, https: true, apiKey: "<your-api-key>");
await client.UpsertAsync(
    collectionName: "{collection_name}",
    points: new List<PointStruct>
    {
        new()
        {
            Id = 1,
            Vectors = new Dictionary<string, Vector>
            {
                ["my-bm25-vector"] = new Document()
                {
                    Model = "qdrant/bm25",
                    Text = "Recipe for baking chocolate chip cookies",
                },
            },
        },
    }
);

import (
	"context"
	"log"
	"github.com/qdrant/go-client/qdrant"
)
ctx := context.Background()
client, err := qdrant.NewClient(&qdrant.Config{
	Host:   "xyz-example.qdrant.io",
	Port:   6334,
	APIKey: "<paste-your-api-key-here>",
	UseTLS: true,
})
if err != nil {
	log.Fatalf("did not connect: %v", err)
}
_, err = client.Upsert(ctx, &qdrant.UpsertPoints{
	CollectionName: "{collection_name}",
	Points: []*qdrant.PointStruct{
		{
			Id: qdrant.NewIDNum(uint64(1)),
			Vectors: qdrant.NewVectorsMap(map[string]*qdrant.Vector{
				"my-bm25-vector": qdrant.NewVectorDocument(&qdrant.Document{
					Model: "qdrant/bm25",
					Text:  "Recipe for baking chocolate chip cookies",
				}),
			}),
		},
	},
})
if err != nil {
	log.Fatalf("upsert failed: %v", err)
}

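Qdrant generates the embedding with the model and stores the point together with the resulting vector. When you retrieve the point, the generated embedding is included: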

....
"my-bm25-vector": {
  "indices": [112174620, 177304315, 662344706, 771857363, 1617337648],
  "values": [1.6697302, 1.6697302, 1.6697302, 1.6697302, 1.6697302]
}
....
]
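All five values above are identical because every term occurs once in a short document. The Python sketch below reproduces that number using the document-side BM25 term-frequency weight `tf * (k1 + 1) / (tf + k1 * (1 - b + b * doc_len / avg_len))`. It rests on several assumptions:

- The parameters are k1 = 1.2, b = 0.75 and avg_len = 256. These are believed to be FastEmbed's BM25 defaults.
- The stopword "for" is dropped, which leaves 5 tokens.

With those inputs, each weight comes out at about 1.6697302. That match suggests, but does not prove, that these are the parameters in use.

The tokenizer, the tiny stopword list, and the use of `zlib.crc32` for indices are illustrative stand-ins. Qdrant's real tokenization, stemming, and hashing differ, so the indices will not match the ones shown. The IDF part of BM25 is not included in this weight.

```python
import zlib
from collections import Counter

# Assumed BM25 parameters (believed to match FastEmbed's defaults).
K1 = 1.2
B = 0.75
AVG_LEN = 256.0

# Tiny illustrative stopword list; real tokenizers use a full list plus stemming.
STOPWORDS = {"a", "an", "the", "for", "to", "how", "of"}


def tokenize(text: str) -> list[str]:
    """Lowercase, split on whitespace, strip punctuation, drop stopwords."""
    tokens = (t.strip("?.,!").lower() for t in text.split())
    return [t for t in tokens if t and t not in STOPWORDS]


def bm25_doc_vector(text: str) -> dict[int, float]:
    """Return a sparse {index: weight} map holding the BM25 term-frequency weight."""
    tokens = tokenize(text)
    doc_len = len(tokens)
    vector: dict[int, float] = {}
    for token, tf in Counter(tokens).items():
        weight = tf * (K1 + 1) / (tf + K1 * (1 - B + B * doc_len / AVG_LEN))
        # Stand-in hash: NOT the hash function Qdrant uses.
        vector[zlib.crc32(token.encode("utf-8"))] = weight
    return vector


if __name__ == "__main__":
    vec = bm25_doc_vector("Recipe for baking chocolate chip cookies")
    for index, weight in sorted(vec.items()):
        print(index, round(weight, 7))
```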

Similarly, you can use inference at query time by providing the query text and the embedding model:

POST /collections/{collection_name}/points/query
{
  "query": {
    "text": "How to bake cookies?",
    "model": "qdrant/bm25"
  },
  "using": "my-bm25-vector"
}

from qdrant_client import QdrantClient, models
client = QdrantClient(
    url="https://xyz-example.qdrant.io:6333",
    api_key="<your-api-key>",
    cloud_inference=True,
)
client.query_points(
    collection_name="{collection_name}",
    query=models.Document(
        text="How to bake cookies?",
        model="Qdrant/bm25",
    ),
    using="my-bm25-vector",
)

import { QdrantClient } from "@qdrant/js-client-rest";
const client = new QdrantClient({ host: "localhost", port: 6333 });
client.query("{collection_name}", {
  query: {
    text: 'How to bake cookies?',
    model: 'qdrant/bm25',
  },
  using: 'my-bm25-vector',
});

use qdrant_client::{
    Qdrant, QdrantError,
    qdrant::{Document, Query, QueryPointsBuilder},
};
let client = Qdrant::from_url("<your-qdrant-url>").build().unwrap();
client
    .query(
        QueryPointsBuilder::new("{collection_name}")
            .query(Query::new_nearest(Document {
                text: "How to bake cookies?".into(),
                model: "qdrant/bm25".into(),
                ..Default::default()
            }))
            .using("my-bm25-vector")
            .build(),
    )
    .await?;

import static io.qdrant.client.QueryFactory.nearest;
import io.qdrant.client.QdrantClient;
import io.qdrant.client.QdrantGrpcClient;
import io.qdrant.client.grpc.Points;
import io.qdrant.client.grpc.Points.Document;
QdrantClient client =
    new QdrantClient(
        QdrantGrpcClient.newBuilder("xyz-example.qdrant.io", 6334, true)
            .withApiKey("<your-api-key>")
            .build());
client
    .queryAsync(
        Points.QueryPoints.newBuilder()
            .setCollectionName("{collection_name}")
            .setQuery(
                nearest(
                    Document.newBuilder()
                        .setModel("qdrant/bm25")
                        .setText("How to bake cookies?")
                        .build()))
            .setUsing("my-bm25-vector")
            .build())
    .get();

using Qdrant.Client;
using Qdrant.Client.Grpc;
var client = new QdrantClient(
    host: "xyz-example.qdrant.io",
    port: 6334,
    https: true,
    apiKey: "<your-api-key>"
);
await client.QueryAsync(
    collectionName: "{collection_name}",
    query: new Document() { Model = "qdrant/bm25", Text = "How to bake cookies?" },
    usingVector: "my-bm25-vector"
);

import (
	"context"
	"log"
	"github.com/qdrant/go-client/qdrant"
)
ctx := context.Background()
client, err := qdrant.NewClient(&qdrant.Config{
	Host:   "xyz-example.qdrant.io",
	Port:   6334,
	APIKey: "<paste-your-api-key-here>",
	UseTLS: true,
})
if err != nil {
	log.Fatalf("did not connect: %v", err)
}
points, err := client.Query(ctx, &qdrant.QueryPoints{
	CollectionName: "{collection_name}",
	Query: qdrant.NewQueryNearest(
		qdrant.NewVectorInputDocument(&qdrant.Document{
			Model: "qdrant/bm25",
			Text:  "How to bake cookies?",
		}),
	),
	Using: qdrant.PtrOf("my-bm25-vector"),
})
if err != nil {
	log.Fatalf("query failed: %v", err)
}
log.Printf("List of points: %v", points)
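This sketch shows how a query-side sparse vector is compared with stored ones. It computes the dot product over shared indices, which is how Qdrant scores sparse vectors. It assumes that each query term gets weight 1.0 scaled by an IDF value supplied by the caller, which is believed to resemble how BM25 queries are scored. The IDF values and toy vectors below are made up and do not reproduce Qdrant's internal scoring.

```python
def sparse_dot(query: dict[int, float], doc: dict[int, float]) -> float:
    """Dot product of two sparse vectors stored as {index: value} maps."""
    # Iterate over the smaller map; only indices present in both contribute.
    small, large = (query, doc) if len(query) <= len(doc) else (doc, query)
    return sum(value * large[index] for index, value in small.items() if index in large)


def bm25_score(query_indices: list[int], doc: dict[int, float], idf: dict[int, float]) -> float:
    """Score a document: each query term has weight 1.0 multiplied by its IDF."""
    query = {index: idf.get(index, 0.0) for index in query_indices}
    return sparse_dot(query, doc)


if __name__ == "__main__":
    # Toy data: indices 1 and 2 stand for two query terms; all values are invented.
    doc = {1: 1.67, 3: 1.67, 7: 1.67}
    idf = {1: 2.0, 2: 0.5}
    print(bm25_score([1, 2], doc, idf))
```

Only terms shared by the query and the document contribute to the score. That is why a sparse representation keyed by term index is efficient for this kind of search.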

Qdrant Cloud Inference

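Clusters on Qdrant Managed Cloud can use embedding models hosted on Qdrant Cloud. For a list of available models, open the Inference tab of the cluster details page in the Qdrant Cloud Console. You can also enable Cloud Inference for the cluster there if it is not already enabled.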

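Before using a cloud-hosted embedding model, make sure your collection is configured with vectors of the correct dimension. The Inference tab of the cluster details page in the Qdrant Cloud Console lists the dimension of each supported embedding model.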

Text inference

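Consider an example that uses Cloud Inference with a text model that produces dense vectors. It creates a point and then runs a simple search query with a Document inference object.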

# Insert new points with cloud-side inference
PUT /collections/<your-collection>/points?wait=true
{
  "points": [
    {
      "id": 1,
      "payload": { "topic": "cooking", "type": "dessert" },
      "vector": {
        "text": "Recipe for baking chocolate chip cookies",
        "model": "<the-model-to-use>"
      }
    }
  ]
}
# Search in the collection using cloud-side inference
POST /collections/<your-collection>/points/query
{
  "query": {
    "text": "How to bake cookies?",
    "model": "<the-model-to-use>"
  }
}

# Insert a new point
curl -X PUT "https://xyz-example.qdrant.io:6333/collections/<your-collection>/points?wait=true" \
  -H "Content-Type: application/json" \
  -H "api-key: <paste-your-api-key-here>" \
  -d '{
    "points": [
      {
        "id": 1,
        "payload": { "topic": "cooking", "type": "dessert" },
        "vector": {
          "text": "Recipe for baking chocolate chip cookies",
          "model": "<the-model-to-use>"
        }
      }
    ]
  }'
# Perform a search query
curl -X POST "https://xyz-example.qdrant.io:6333/collections/<your-collection>/points/query" \
  -H "Content-Type: application/json" \
  -H "api-key: <paste-your-api-key-here>" \
  -d '{
    "query": {
      "text": "How to bake cookies?",
      "model": "<the-model-to-use>"
    }
  }'

from qdrant_client import QdrantClient
from qdrant_client.models import PointStruct, Document
client = QdrantClient(
    url="https://xyz-example.qdrant.io:6333",
    api_key="<paste-your-api-key-here>",
    # IMPORTANT
    # If not enabled, inference will be performed locally
    cloud_inference=True,
)
points = [
    PointStruct(
        id=1,
        payload={"topic": "cooking", "type": "dessert"},
        vector=Document(
            text="Recipe for baking chocolate chip cookies",
            model="<the-model-to-use>",
        ),
    )
]
client.upsert(collection_name="<your-collection>", points=points)
result = client.query_points(
    collection_name="<your-collection>",
    query=Document(
        text="How to bake cookies?",
        model="<the-model-to-use>",
    ),
)
print(result)

import {QdrantClient} from "@qdrant/js-client-rest";
const client = new QdrantClient({
  url: 'https://xyz-example.qdrant.io:6333',
  apiKey: '<paste-your-api-key-here>',
});
const points = [
  {
    id: 1,
    payload: { topic: "cooking", type: "dessert" },
    vector: {
      text: "Recipe for baking chocolate chip cookies",
      model: "<the-model-to-use>"
    }
  }
];
await client.upsert("<your-collection>", { wait: true, points });
const result = await client.query(
  "<your-collection>",
  {
    query: {
      text: "How to bake cookies?",
      model: "<the-model-to-use>"
    },
  }
);
console.log(result);

use qdrant_client::qdrant::Query;
use qdrant_client::qdrant::QueryPointsBuilder;
use qdrant_client::Payload;
use qdrant_client::Qdrant;
use qdrant_client::qdrant::{Document};
use qdrant_client::qdrant::{PointStruct, UpsertPointsBuilder};
#[tokio::main]
async fn main() {
    let client = Qdrant::from_url("https://xyz-example.qdrant.io:6334")
        .api_key("<paste-your-api-key-here>")
        .build()
        .unwrap();
    let points = vec![
        PointStruct::new(
            1,
            Document::new(
                "Recipe for baking chocolate chip cookies",
                "<the-model-to-use>"
            ),
            Payload::try_from(serde_json::json!(
                {"topic": "cooking", "type": "dessert"}
            )).unwrap(),
        )
    ];
    let upsert_request = UpsertPointsBuilder::new(
        "<your-collection>",
        points
    ).wait(true);
    // Fail loudly instead of silently discarding an upsert error
    client.upsert_points(upsert_request).await.unwrap();
    let query_document = Document::new(
        "How to bake cookies?",
        "<the-model-to-use>"
    );
    let query_request = QueryPointsBuilder::new("<your-collection>")
        .query(Query::new_nearest(query_document));
    let result = client.query(query_request).await.unwrap();
    println!("Result: {:?}", result);
}

package org.example;
import static io.qdrant.client.PointIdFactory.id;
import static io.qdrant.client.QueryFactory.nearest;
import static io.qdrant.client.ValueFactory.value;
import static io.qdrant.client.VectorsFactory.vectors;
import io.qdrant.client.QdrantClient;
import io.qdrant.client.QdrantGrpcClient;
import io.qdrant.client.grpc.Points;
import io.qdrant.client.grpc.Points.Document;
import io.qdrant.client.grpc.Points.PointStruct;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ExecutionException;
public class Main {
    public static void main(String[] args)
            throws ExecutionException, InterruptedException {
        QdrantClient client =
            new QdrantClient(
                QdrantGrpcClient.newBuilder("xyz-example.qdrant.io", 6334, true)
                    .withApiKey("<paste-your-api-key-here>")
                    .build());
        client
            .upsertAsync(
                "<your-collection>",
                List.of(
                    PointStruct.newBuilder()
                        .setId(id(1))
                        .setVectors(
                            vectors(
                                Document.newBuilder()
                                    .setText("Recipe for baking chocolate chip cookies")
                                    .setModel("<the-model-to-use>")
                                    .build()))
                        .putAllPayload(Map.of("topic", value("cooking"), "type", value("dessert")))
                        .build()))
            .get();
        List<Points.ScoredPoint> points =
            client
                .queryAsync(
                    Points.QueryPoints.newBuilder()
                        .setCollectionName("<your-collection>")
                        .setQuery(
                            nearest(
                                Document.newBuilder()
                                    .setText("How to bake cookies?")
                                    .setModel("<the-model-to-use>")
                                    .build()))
                        .build())
                .get();
        System.out.println(points);
    }
}

using Qdrant.Client;
using Qdrant.Client.Grpc;
using Value = Qdrant.Client.Grpc.Value;
var client = new QdrantClient(
    host: "xyz-example.qdrant.io",
    port: 6334,
    https: true,
    apiKey: "<paste-your-api-key-here>"
);
await client.UpsertAsync(
    collectionName: "<your-collection>",
    points: new List<PointStruct> {
        new() {
            Id = 1,
            Vectors = new Document() {
                Text = "Recipe for baking chocolate chip cookies",
                Model = "<the-model-to-use>",
            },
            Payload = {
                ["topic"] = "cooking",
                ["type"] = "dessert"
            },
        },
    }
);
var points = await client.QueryAsync(
    collectionName: "<your-collection>",
    query: new Document() {
        Text = "How to bake cookies?",
        Model = "<the-model-to-use>"
    }
);
foreach (var point in points) {
    Console.WriteLine(point);
}

package main
import (
	"context"
	"log"
	"time"
	"github.com/qdrant/go-client/qdrant"
)
func main() {
	ctx, cancel := context.WithTimeout(context.Background(), time.Second)
	defer cancel()
	client, err := qdrant.NewClient(&qdrant.Config{
		Host:   "xyz-example.qdrant.io",
		Port:   6334,
		APIKey: "<paste-your-api-key-here>",
		UseTLS: true,
	})
	if err != nil {
		log.Fatalf("did not connect: %v", err)
	}
	defer client.Close()
	_, err = client.Upsert(ctx, &qdrant.UpsertPoints{
		CollectionName: "<your-collection>",
		Points: []*qdrant.PointStruct{
			{
				Id: qdrant.NewIDNum(1),
				Vectors: qdrant.NewVectorsDocument(&qdrant.Document{
					Text:  "Recipe for baking chocolate chip cookies",
					Model: "<the-model-to-use>",
				}),
				Payload: qdrant.NewValueMap(map[string]any{
					"topic": "cooking",
					"type":  "dessert",
				}),
			},
		},
	})
	if err != nil {
		log.Fatalf("error creating point: %v", err)
	}
	points, err := client.Query(ctx, &qdrant.QueryPoints{
		CollectionName: "<your-collection>",
		Query: qdrant.NewQueryNearest(
			qdrant.NewVectorInputDocument(&qdrant.Document{
				Text:  "How to bake cookies?",
				Model: "<the-model-to-use>",
			}),
		),
	})
	if err != nil {
		log.Fatalf("error querying points: %v", err)
	}
	log.Printf("List of points: %v", points)
}

Usage examples specific to each cluster and model are also available in the Inference tab of the cluster details page in the Qdrant Cloud Console.

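Note that each model has a context window: the maximum number of tokens the model can process in a single request. If the input text exceeds the context window, it is truncated to fit. The context window size is shown in the Inference tab of the cluster details page.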
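Over-long input is truncated rather than rejected, so the tail of a long text may silently not contribute to its embedding. The Python sketch below is a client-side guard for this. It approximates tokens by whitespace-separated words, which is only a rough proxy for the model's real tokenizer. Subword tokenizers usually produce more tokens than words, so treat the word count as a lower bound. The 512-token limit in the example is a hypothetical value; use the context window shown in the Inference tab.

```python
def would_truncate(text: str, max_tokens: int) -> bool:
    """Rough check: True if the word count already exceeds the token limit."""
    return len(text.split()) > max_tokens


def split_for_context(text: str, max_tokens: int) -> list[str]:
    """Split text into chunks of at most max_tokens whitespace-delimited words."""
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")
    words = text.split()
    return [" ".join(words[i:i + max_tokens]) for i in range(0, len(words), max_tokens)]


if __name__ == "__main__":
    long_text = "word " * 1200
    limit = 512  # hypothetical context window
    print(would_truncate(long_text, limit))
    print(len(split_for_context(long_text, limit)))
```

Each chunk can then be embedded as its own point, at the cost of storing several vectors per source document.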

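For dense vector models, you also need to make sure that the vector size configured in the collection matches the model's output size. If the sizes do not match, the upsert fails with an error.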
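A cheap way to catch a size mismatch before the upsert fails is to compare the collection's configured vector size with the model's output size. The Python sketch below works on the JSON returned by `GET /collections/<your-collection>`. It assumes the usual layout `result.config.params.vectors`, which holds either a single `{"size": ..., "distance": ...}` object or a map of named vectors. The sample response is abbreviated and invented for illustration.

```python
from typing import Any, Optional


def configured_size(collection_info: dict[str, Any], vector_name: Optional[str] = None) -> int:
    """Extract the dense vector size from a GET /collections/<name> response body."""
    vectors = collection_info["result"]["config"]["params"]["vectors"]
    if "size" in vectors:  # single unnamed vector
        return int(vectors["size"])
    if vector_name is None:
        raise KeyError("collection uses named vectors; pass vector_name")
    return int(vectors[vector_name]["size"])


def check_dimensions(collection_info: dict[str, Any], model_dim: int,
                     vector_name: Optional[str] = None) -> None:
    """Raise ValueError if the collection's vector size differs from the model output."""
    size = configured_size(collection_info, vector_name)
    if size != model_dim:
        raise ValueError(f"collection expects {size}-d vectors, model produces {model_dim}-d")


if __name__ == "__main__":
    # Abbreviated, invented response for illustration.
    info = {"result": {"config": {"params": {"vectors": {"size": 512, "distance": "Cosine"}}}}}
    check_dimensions(info, 512)  # sizes match, nothing is raised
    try:
        check_dimensions(info, 384)
    except ValueError as err:
        print(err)
```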

Image inference

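Here is another example, this time using Cloud Inference with an image model. It encodes an image with a CLIP model and then searches for that image with a text query.

Because CLIP models are multimodal, image and text inputs can be used on the same vector field.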

# Insert new points with cloud-side inference
PUT /collections/<your-collection>/points?wait=true
{
  "points": [
    {
      "id": 1,
      "vector": {
        "image": "https://qdrant.org.cn/example.png",
        "model": "qdrant/clip-vit-b-32-vision"
      },
      "payload": {
        "title": "Example Image"
      }
    }
  ]
}
# Search in the collection using cloud-side inference
POST /collections/<your-collection>/points/query
{
  "query": {
    "text": "Mission to Mars",
    "model": "qdrant/clip-vit-b-32-text"
  }
}

# Insert a new point
curl -X PUT "https://xyz-example.qdrant.io:6333/collections/<your-collection>/points?wait=true" \
  -H "Content-Type: application/json" \
  -H "api-key: <paste-your-api-key-here>" \
  -d '{
    "points": [
      {
        "id": 1,
        "vector": {
          "image": "https://qdrant.org.cn/example.png",
          "model": "qdrant/clip-vit-b-32-vision"
        },
        "payload": {
          "title": "Example Image"
        }
      }
    ]
  }'
# Perform a search query
curl -X POST "https://xyz-example.qdrant.io:6333/collections/<your-collection>/points/query" \
  -H "Content-Type: application/json" \
  -H "api-key: <paste-your-api-key-here>" \
  -d '{
    "query": {
      "text": "Mission to Mars",
      "model": "qdrant/clip-vit-b-32-text"
    }
  }'

from qdrant_client import QdrantClient
from qdrant_client.models import PointStruct, Image, Document
client = QdrantClient(
    url="https://xyz-example.qdrant.io:6333",
    api_key="<paste-your-api-key-here>",
    # IMPORTANT
    # If not enabled, inference will be performed locally
    cloud_inference=True,
)
points = [
    PointStruct(
        id=1,
        vector=Image(
            image="https://qdrant.org.cn/example.png",
            model="qdrant/clip-vit-b-32-vision",
        ),
        payload={
            "title": "Example Image"
        },
    )
]
client.upsert(collection_name="<your-collection>", points=points)
result = client.query_points(
    collection_name="<your-collection>",
    query=Document(
        text="Mission to Mars",
        model="qdrant/clip-vit-b-32-text",
    ),
)
print(result)

import {QdrantClient} from "@qdrant/js-client-rest";
const client = new QdrantClient({
  url: 'https://xyz-example.qdrant.io:6333',
  apiKey: '<paste-your-api-key-here>',
});
const points = [
  {
    id: 1,
    vector: {
      image: "https://qdrant.org.cn/example.png",
      model: "qdrant/clip-vit-b-32-vision"
    },
    payload: {
      title: "Example Image"
    }
  }
];
await client.upsert("<your-collection>", { wait: true, points });
const result = await client.query(
  "<your-collection>",
  {
    query: {
      text: "Mission to Mars",
      model: "qdrant/clip-vit-b-32-text"
    },
  }
);
console.log(result);

use qdrant_client::qdrant::Query;
use qdrant_client::qdrant::QueryPointsBuilder;
use qdrant_client::Payload;
use qdrant_client::Qdrant;
use qdrant_client::qdrant::{Document, Image};
use qdrant_client::qdrant::{PointStruct, UpsertPointsBuilder};
#[tokio::main]
async fn main() {
    let client = Qdrant::from_url("https://xyz-example.qdrant.io:6334")
        .api_key("<paste-your-api-key-here>")
        .build()
        .unwrap();
    let points = vec![
        PointStruct::new(
            1,
            Image::new_from_url(
                "https://qdrant.org.cn/example.png",
                "qdrant/clip-vit-b-32-vision"
            ),
            Payload::try_from(serde_json::json!({
                "title": "Example Image"
            })).unwrap(),
        )
    ];
    let upsert_request = UpsertPointsBuilder::new(
        "<your-collection>",
        points
    ).wait(true);
    // Fail loudly instead of silently discarding an upsert error
    client.upsert_points(upsert_request).await.unwrap();
    let query_document = Document::new(
        "Mission to Mars",
        "qdrant/clip-vit-b-32-text"
    );
    let query_request = QueryPointsBuilder::new("<your-collection>")
        .query(Query::new_nearest(query_document));
    let result = client.query(query_request).await.unwrap();
    println!("Result: {:?}", result);
}

package org.example;
import static io.qdrant.client.PointIdFactory.id;
import static io.qdrant.client.QueryFactory.nearest;
import static io.qdrant.client.ValueFactory.value;
import static io.qdrant.client.VectorsFactory.vectors;
import io.qdrant.client.QdrantClient;
import io.qdrant.client.QdrantGrpcClient;
import io.qdrant.client.grpc.Points;
import io.qdrant.client.grpc.Points.Document;
import io.qdrant.client.grpc.Points.Image;
import io.qdrant.client.grpc.Points.PointStruct;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ExecutionException;
public class Main {
    public static void main(String[] args)
            throws ExecutionException, InterruptedException {
        QdrantClient client =
            new QdrantClient(
                QdrantGrpcClient.newBuilder("xyz-example.qdrant.io", 6334, true)
                    .withApiKey("<paste-your-api-key-here>")
                    .build());
        client
            .upsertAsync(
                "<your-collection>",
                List.of(
                    PointStruct.newBuilder()
                        .setId(id(1))
                        .setVectors(
                            vectors(
                                Image.newBuilder()
                                    .setImage(value("https://qdrant.org.cn/example.png"))
                                    .setModel("qdrant/clip-vit-b-32-vision")
                                    .build()))
                        .putAllPayload(Map.of("title", value("Example Image")))
                        .build()))
            .get();
        List<Points.ScoredPoint> points =
            client
                .queryAsync(
                    Points.QueryPoints.newBuilder()
                        .setCollectionName("<your-collection>")
                        .setQuery(
                            nearest(
                                Document.newBuilder()
                                    .setText("Mission to Mars")
                                    .setModel("qdrant/clip-vit-b-32-text")
                                    .build()))
                        .build())
                .get();
        System.out.println(points);
    }
}

using Qdrant.Client;
using Qdrant.Client.Grpc;
using Value = Qdrant.Client.Grpc.Value;
var client = new QdrantClient(
    host: "xyz-example.qdrant.io",
    port: 6334,
    https: true,
    apiKey: "<paste-your-api-key-here>"
);
await client.UpsertAsync(
    collectionName: "<your-collection>",
    points: new List<PointStruct> {
        new() {
            Id = 1,
            Vectors = new Image() {
                Image_ = "https://qdrant.org.cn/example.png",
                Model = "qdrant/clip-vit-b-32-vision",
            },
            Payload = {
                ["title"] = "Example Image"
            },
        },
    }
);
var points = await client.QueryAsync(
    collectionName: "<your-collection>",
    query: new Document() {
        Text = "Mission to Mars",
        Model = "qdrant/clip-vit-b-32-text"
    }
);
foreach (var point in points) {
    Console.WriteLine(point);
}

package main
import (
	"context"
	"log"
	"time"
	"github.com/qdrant/go-client/qdrant"
)
func main() {
	ctx, cancel := context.WithTimeout(context.Background(), time.Second)
	defer cancel()
	client, err := qdrant.NewClient(&qdrant.Config{
		Host:   "xyz-example.qdrant.io",
		Port:   6334,
		APIKey: "<paste-your-api-key-here>",
		UseTLS: true,
	})
	if err != nil {
		log.Fatalf("did not connect: %v", err)
	}
	defer client.Close()
	_, err = client.Upsert(ctx, &qdrant.UpsertPoints{
		CollectionName: "<your-collection>",
		Points: []*qdrant.PointStruct{
			{
				Id: qdrant.NewIDNum(1),
				Vectors: qdrant.NewVectorsImage(&qdrant.Image{
					Model: "qdrant/clip-vit-b-32-vision",
					Image: qdrant.NewValueString("https://qdrant.org.cn/example.png"),
				}),
				Payload: qdrant.NewValueMap(map[string]any{
					"title": "Example Image",
				}),
			},
		},
	})
	if err != nil {
		log.Fatalf("error creating point: %v", err)
	}
	points, err := client.Query(ctx, &qdrant.QueryPoints{
		CollectionName: "<your-collection>",
		Query: qdrant.NewQueryNearest(
			qdrant.NewVectorInputDocument(&qdrant.Document{
				Text:  "Mission to Mars",
				Model: "qdrant/clip-vit-b-32-text",
			}),
		),
	})
	if err != nil {
		log.Fatalf("error querying points: %v", err)
	}
	log.Printf("List of points: %v", points)
}

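The Qdrant Cloud inference server downloads the image from the provided URL. Alternatively, you can provide the image as a base64-encoded string. Each model has limits on the file sizes and file extensions it accepts; see the model card for details.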
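To send a local file instead of a URL, you encode it as base64. The Cohere example later on this page passes the image as a `data:` URI (`data:image/png;base64,...`). The Python sketch below builds such a string from raw bytes using only the standard library. Two things are assumptions to verify against each model card: whether a model accepts a data URI, a bare base64 string, or both, and the model's size and format limits. The 5 MiB `max_bytes` default here is a hypothetical value.

```python
import base64
import mimetypes
from typing import Optional


def to_data_uri(data: bytes, filename: str, max_bytes: Optional[int] = 5 * 1024 * 1024) -> str:
    """Encode image bytes as a base64 data URI, guessing the MIME type from the filename."""
    if max_bytes is not None and len(data) > max_bytes:
        raise ValueError(f"image is {len(data)} bytes, over the {max_bytes}-byte limit")
    mime, _ = mimetypes.guess_type(filename)
    if mime is None or not mime.startswith("image/"):
        raise ValueError(f"cannot determine an image MIME type for {filename!r}")
    encoded = base64.b64encode(data).decode("ascii")
    return f"data:{mime};base64,{encoded}"


if __name__ == "__main__":
    # In practice: data = open("example.png", "rb").read()
    signature = b"\x89PNG\r\n\x1a\n"  # just the 8-byte PNG signature, for illustration
    print(to_data_uri(signature, "example.png"))
```

The resulting string goes in the `image` field of an Image inference object, in place of the URL.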

Local inference compatibility

The Python SDK offers a unique feature: it supports both local and cloud inference through the same interface.

You can switch between local and cloud inference by setting the cloud_inference flag when you initialize QdrantClient. For example:

from qdrant_client import QdrantClient
client = QdrantClient(
    url="https://your-cluster.qdrant.io",
    api_key="<your-api-key>",
    cloud_inference=True,  # Set to False to use local inference
)

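This flexibility lets you develop and test your application locally or in continuous integration (CI) environments without access to Cloud Inference resources.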

- When cloud_inference is set to False, inference is performed locally using fastembed.

- When it is set to True, inference requests are handled by Qdrant Cloud.
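One way to use this in CI is to read the flag from the environment. The same code then runs with FastEmbed locally and with Cloud Inference in production. Local inference presumably only works for models that FastEmbed itself supports.

The sketch below only computes the keyword arguments for `QdrantClient`. The environment variable names `QDRANT_URL`, `QDRANT_API_KEY`, and `QDRANT_CLOUD_INFERENCE` are hypothetical choices, not settings that Qdrant reads on its own.

```python
import os
from typing import Any, Mapping, Optional

TRUTHY = {"1", "true", "yes", "on"}


def client_kwargs(env: Optional[Mapping[str, str]] = None) -> dict[str, Any]:
    """Build QdrantClient keyword arguments from (hypothetical) environment variables."""
    env = os.environ if env is None else env
    return {
        "url": env.get("QDRANT_URL", "http://localhost:6333"),
        "api_key": env.get("QDRANT_API_KEY"),
        # Default to local (FastEmbed) inference unless explicitly enabled.
        "cloud_inference": env.get("QDRANT_CLOUD_INFERENCE", "false").strip().lower() in TRUTHY,
    }


if __name__ == "__main__":
    print(client_kwargs({"QDRANT_CLOUD_INFERENCE": "true"}))
    # With qdrant-client installed: QdrantClient(**client_kwargs())
```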

External embedding model providers

Qdrant Cloud can act as an API proxy for three external embedding model providers:

- OpenAI

- Cohere

- Jina AI

This lets you use any embedding model offered by these providers through the Qdrant API.

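To use an embedding model from an external provider, you need an API key for that provider. For example, to access OpenAI models you need an OpenAI API key. Qdrant does not store or cache your API keys, so they must be provided with every inference request.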

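When using external embedding models, make sure your collection is configured with vectors of the correct dimension. See the model's documentation for its output dimensions.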

OpenAI

When you prefix the model name with openai/, the embedding request is automatically routed to the OpenAI Embeddings API.

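For example, to use OpenAI's text-embedding-3-large model when ingesting data, prefix the model name with openai/ and provide your OpenAI API key in the options object. This example also uses the OpenAI-specific dimensions API parameter to reduce the dimensionality to 512.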

PUT /collections/{collection_name}/points?wait=true
{
  "points": [
    {
      "id": 1,
      "vector": {
        "text": "Recipe for baking chocolate chip cookies",
        "model": "openai/text-embedding-3-large",
        "options": {
          "openai-api-key": "<YOUR_OPENAI_API_KEY>",
          "dimensions": 512
        }
      }
    }
  ]
}

from qdrant_client import QdrantClient, models
client = QdrantClient(
    url="https://xyz-example.qdrant.io:6333",
    api_key="<your-api-key>",
    cloud_inference=True,
)
client.upsert(
    collection_name="{collection_name}",
    points=[
        models.PointStruct(
            id=1,
            vector=models.Document(
                text="Recipe for baking chocolate chip cookies",
                model="openai/text-embedding-3-large",
                options={
                    "openai-api-key": "<your_openai_api_key>",
                    "dimensions": 512,
                },
            ),
        )
    ],
)

import { QdrantClient } from "@qdrant/js-client-rest";
const client = new QdrantClient({ host: "localhost", port: 6333 });
client.upsert("{collection_name}", {
  points: [
    {
      id: 1,
      vector: {
        text: 'Recipe for baking chocolate chip cookies',
        model: 'openai/text-embedding-3-large',
        options: {
          'openai-api-key': '<your_openai_api_key>',
          dimensions: 512,
        },
      },
    },
  ],
});

use qdrant_client::{
    Payload, Qdrant, QdrantError,
    qdrant::{Document, PointStruct, UpsertPointsBuilder},
};
use std::collections::HashMap;
let client = Qdrant::from_url("<your-qdrant-url>").build()?;
let mut options = HashMap::new();
options.insert("openai-api-key".to_string(), "<YOUR_OPENAI_API_KEY>".into());
options.insert("dimensions".to_string(), 512.into());
client
    .upsert_points(UpsertPointsBuilder::new("{collection_name}",
        vec![
            PointStruct::new(1,
                Document {
                    text: "Recipe for baking chocolate chip cookies".into(),
                    model: "openai/text-embedding-3-large".into(),
                    options,
                },
                Payload::default())
        ]).wait(true))
    .await?;

import static io.qdrant.client.PointIdFactory.id;
import static io.qdrant.client.ValueFactory.value;
import static io.qdrant.client.VectorsFactory.vectors;
import io.qdrant.client.QdrantClient;
import io.qdrant.client.QdrantGrpcClient;
import io.qdrant.client.grpc.Points.Document;
import io.qdrant.client.grpc.Points.PointStruct;
import java.util.List;
import java.util.Map;
QdrantClient client =
    new QdrantClient(
        QdrantGrpcClient.newBuilder("xyz-example.qdrant.io", 6334, true)
            .withApiKey("<your-api-key>")
            .build());
client
    .upsertAsync(
        "{collection_name}",
        List.of(
            PointStruct.newBuilder()
                .setId(id(1))
                .setVectors(
                    vectors(
                        Document.newBuilder()
                            .setModel("openai/text-embedding-3-large")
                            .setText("Recipe for baking chocolate chip cookies")
                            .putAllOptions(
                                Map.of(
                                    "openai-api-key",
                                    value("<YOUR_OPENAI_API_KEY>"),
                                    "dimensions",
                                    value(512)))
                            .build()))
                .build()))
    .get();

using Qdrant.Client;
using Qdrant.Client.Grpc;
var client = new QdrantClient(
    host: "xyz-example.qdrant.io", port: 6334, https: true, apiKey: "<your-api-key>");
await client.UpsertAsync(
    collectionName: "{collection_name}",
    points: new List<PointStruct>
    {
        new()
        {
            Id = 1,
            Vectors = new Document()
            {
                Model = "openai/text-embedding-3-large",
                Text = "Recipe for baking chocolate chip cookies",
                Options = { ["openai-api-key"] = "<YOUR_OPENAI_API_KEY>", ["dimensions"] = 512 },
            },
        },
    }
);

import (
	"context"
	"log"
	"github.com/qdrant/go-client/qdrant"
)
ctx := context.Background()
client, err := qdrant.NewClient(&qdrant.Config{
	Host:   "xyz-example.qdrant.io",
	Port:   6334,
	APIKey: "<paste-your-api-key-here>",
	UseTLS: true,
})
if err != nil {
	log.Fatalf("did not connect: %v", err)
}
_, err = client.Upsert(ctx, &qdrant.UpsertPoints{
	CollectionName: "{collection_name}",
	Points: []*qdrant.PointStruct{
		{
			Id: qdrant.NewIDNum(uint64(1)),
			Vectors: qdrant.NewVectorsDocument(&qdrant.Document{
				Model: "openai/text-embedding-3-large",
				Text:  "Recipe for baking chocolate chip cookies",
				Options: qdrant.NewValueMap(map[string]any{
					"openai-api-key": "<YOUR_OPENAI_API_KEY>",
					"dimensions":     512,
				}),
			}),
		},
	},
})
if err != nil {
	log.Fatalf("upsert failed: %v", err)
}

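At query time, you can use the same model by prefixing the model name with openai/ and providing your OpenAI API key in the options object. This example again uses the OpenAI-specific dimensions API parameter to reduce the dimensionality to 512.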

POST /collections/{collection_name}/points/query
{
  "query": {
    "text": "How to bake cookies?",
    "model": "openai/text-embedding-3-large",
    "options": {
      "openai-api-key": "<YOUR_OPENAI_API_KEY>",
      "dimensions": 512
    }
  }
}

from qdrant_client import QdrantClient, models
client = QdrantClient(
    url="https://xyz-example.qdrant.io:6333",
    api_key="<your-api-key>",
    cloud_inference=True,
)
client.query_points(
    collection_name="{collection_name}",
    query=models.Document(
        text="How to bake cookies?",
        model="openai/text-embedding-3-large",
        options={
            "openai-api-key": "<your_openai_api_key>",
            "dimensions": 512,
        },
    ),
)

import { QdrantClient } from "@qdrant/js-client-rest";
const client = new QdrantClient({ host: "localhost", port: 6333 });
client.query("{collection_name}", {
  query: {
    text: 'How to bake cookies?',
    model: 'openai/text-embedding-3-large',
    options: {
      'openai-api-key': '<your_openai_api_key>',
      dimensions: 512,
    },
  },
});

use qdrant_client::{
    Qdrant, QdrantError,
    qdrant::{Document, Query, QueryPointsBuilder, Value},
};
use std::collections::HashMap;
let client = Qdrant::from_url("<your-qdrant-url>").build().unwrap();
let mut options = HashMap::<String, Value>::new();
options.insert("openai-api-key".to_string(), "<YOUR_OPENAI_API_KEY>".into());
options.insert("dimensions".to_string(), 512.into());
client
    .query(
        QueryPointsBuilder::new("{collection_name}")
            .query(Query::new_nearest(Document {
                text: "How to bake cookies?".into(),
                model: "openai/text-embedding-3-large".into(),
                options,
            }))
            .build(),
    )
    .await?;

import static io.qdrant.client.QueryFactory.nearest;
import static io.qdrant.client.ValueFactory.value;
import io.qdrant.client.QdrantClient;
import io.qdrant.client.QdrantGrpcClient;
import io.qdrant.client.grpc.Points;
import io.qdrant.client.grpc.Points.Document;
import java.util.Map;
QdrantClient client =
    new QdrantClient(
        QdrantGrpcClient.newBuilder("xyz-example.qdrant.io", 6334, true)
            .withApiKey("<your-api-key>")
            .build());
client
    .queryAsync(
        Points.QueryPoints.newBuilder()
            .setCollectionName("{collection_name}")
            .setQuery(
                nearest(
                    Document.newBuilder()
                        .setModel("openai/text-embedding-3-large")
                        .setText("How to bake cookies?")
                        .putAllOptions(
                            Map.of(
                                "openai-api-key",
                                value("<YOUR_OPENAI_API_KEY>"),
                                "dimensions",
                                value(512)))
                        .build()))
            .build())
    .get();

using Qdrant.Client;
using Qdrant.Client.Grpc;
var client = new QdrantClient(
    host: "xyz-example.qdrant.io",
    port: 6334,
    https: true,
    apiKey: "<your-api-key>"
);
await client.QueryAsync(
    collectionName: "{collection_name}",
    query: new Document()
    {
        Model = "openai/text-embedding-3-large",
        Text = "How to bake cookies?",
        Options = { ["openai-api-key"] = "<YOUR_OPENAI_API_KEY>", ["dimensions"] = 512 },
    }
);

import (
	"context"
	"log"
	"github.com/qdrant/go-client/qdrant"
)
ctx := context.Background()
client, err := qdrant.NewClient(&qdrant.Config{
	Host:   "xyz-example.qdrant.io",
	Port:   6334,
	APIKey: "<paste-your-api-key-here>",
	UseTLS: true,
})
if err != nil {
	log.Fatalf("did not connect: %v", err)
}
points, err := client.Query(ctx, &qdrant.QueryPoints{
	CollectionName: "{collection_name}",
	Query: qdrant.NewQueryNearest(
		qdrant.NewVectorInputDocument(&qdrant.Document{
			Model: "openai/text-embedding-3-large",
			Text:  "How to bake cookies?",
			Options: qdrant.NewValueMap(map[string]any{
				"openai-api-key": "<YOUR_OPENAI_API_KEY>",
				"dimensions":     512,
			}),
		}),
	),
})
if err != nil {
	log.Fatalf("query failed: %v", err)
}
log.Printf("List of points: %v", points)

Note that because Qdrant does not store or cache your OpenAI API key, you must provide it with every inference request.
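The key has to travel with every request, so it is convenient to build the inference object in one place and read the key from the environment rather than hard-coding it. The Python sketch below does this for the `openai/` and `cohere/` prefixes. It uses the option names shown on this page: `openai-api-key`, `dimensions`, `cohere-api-key`, and `output_dimension`.

The helper itself and the environment variable names `OPENAI_API_KEY` and `COHERE_API_KEY` are assumptions for illustration. Jina AI is left out because its option names do not appear here.

```python
import os
from typing import Any, Mapping, Optional

# Option names taken from the examples on this page; env var names are assumed.
PROVIDERS = {
    "openai": {"key_option": "openai-api-key", "dim_option": "dimensions", "env": "OPENAI_API_KEY"},
    "cohere": {"key_option": "cohere-api-key", "dim_option": "output_dimension", "env": "COHERE_API_KEY"},
}


def external_document(text: str, model: str, dim: Optional[int] = None,
                      env: Optional[Mapping[str, str]] = None) -> dict[str, Any]:
    """Build a Document inference object for an external provider (hypothetical helper)."""
    env = os.environ if env is None else env
    provider, sep, _ = model.partition("/")
    if not sep or provider not in PROVIDERS:
        raise ValueError(f"expected an 'openai/' or 'cohere/' model name, got {model!r}")
    spec = PROVIDERS[provider]
    key = env.get(spec["env"])
    if not key:
        raise RuntimeError(f"{spec['env']} is not set")
    options: dict[str, Any] = {spec["key_option"]: key}
    if dim is not None:
        # Provider-specific name for the output-dimension parameter.
        options[spec["dim_option"]] = dim
    return {"text": text, "model": model, "options": options}


if __name__ == "__main__":
    doc = external_document("How to bake cookies?", "openai/text-embedding-3-large", 512,
                            env={"OPENAI_API_KEY": "sk-test"})
    print(doc["options"]["dimensions"])
```

Keeping the dimension next to the key also makes it easier to keep it in sync with the collection's configured vector size.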

Cohere

When you prefix the model name with cohere/, the embedding request is automatically routed to the Cohere Embed API.

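For example, to use Cohere's multimodal embed-v4.0 model when ingesting data, prefix the model name with cohere/ and provide your Cohere API key in the options object. This example also uses the Cohere-specific output_dimension API parameter to reduce the dimensionality to 512.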

PUT /collections/{collection_name}/points?wait=true
{
  "points": [
    {
      "id": 1,
      "vector": {
        "image": "data:image/png;base64,iVBORw0KGgoAAAANSUhEUgAAAAoAAAAKCAYAAACNMs+9AAAAFUlEQVR42mNk+M9Qz0AEYBxVSF+FAAhKDveksOjmAAAAAElFTkSuQmCC",
        "model": "cohere/embed-v4.0",
        "options": {
          "cohere-api-key": "<YOUR_COHERE_API_KEY>",
          "output_dimension": 512
        }
      }
    }
  ]
}

from qdrant_client import QdrantClient, models
client = QdrantClient(
    url="https://xyz-example.qdrant.io:6333",
    api_key="<your-api-key>",
    cloud_inference=True,
)
client.upsert(
    collection_name="{collection_name}",
    points=[
        models.PointStruct(
            id=1,
            vector=models.Document(
                text="a green square",
                model="cohere/embed-v4.0",
                options={
                    "cohere-api-key": "<your_cohere_api_key>",
                    "output_dimension": 512,
                },
            ),
        )
    ],
)

import { QdrantClient } from "@qdrant/js-client-rest";
const client = new QdrantClient({ host: "localhost", port: 6333 });
client.upsert("{collection_name}", {
  points: [
    {
      id: 1,
      vector: {
        text: 'a green square',
        model: 'cohere/embed-v4.0',
        options: {
          'cohere-api-key': '<your_cohere_api_key>',
          output_dimension: 512,
        },
      },
    },
  ],
});

use qdrant_client::{

Payload, Qdrant, QdrantError,

qdrant::{Document, PointStruct, UpsertPointsBuilder},

};

use std::collections::HashMap;

let client = Qdrant::from_url("<your-qdrant-url>").build()?;

let mut options = HashMap::new();

options.insert("cohere-api-key".to_string(), "<YOUR_COHERE_API_KEY>".into());

options.insert("output_dimension".to_string(), 512.into());

client

.upsert_points(UpsertPointsBuilder::new("{collection_name}",

vec![

PointStruct::new(1,

Document {

text: "a green square".into(),

model: "cohere/embed-v4.0".into(),

options,

},

Payload::default())

]).wait(true))

.await?;

import static io.qdrant.client.PointIdFactory.id;

import static io.qdrant.client.ValueFactory.value;

import static io.qdrant.client.VectorsFactory.vectors;

import io.qdrant.client.QdrantClient;

import io.qdrant.client.QdrantGrpcClient;

import io.qdrant.client.grpc.Points.Image;

import io.qdrant.client.grpc.Points.PointStruct;

import java.util.List;

import java.util.Map;

QdrantClient client =

new QdrantClient(

QdrantGrpcClient.newBuilder("xyz-example.qdrant.io", 6334, true)

.withApiKey("<your-api-key>")

.build());

client

.upsertAsync(

"{collection_name}",

List.of(

PointStruct.newBuilder()

.setId(id(1))

.setVectors(

vectors(

Image.newBuilder()

.setModel("cohere/embed-v4.0")

.setImage(

value(

"data:image/png;base64,iVBORw0KGgoAAAANSUhEUgAAAAoAAAAKCAYAAACNMs+9AAAAFUlEQVR42mNk+M9Qz0AEYBxVSF+FAAhKDveksOjmAAAAAElFTkSuQmCC"))

.putAllOptions(

Map.of(

"cohere-api-key",

value("<YOUR_COHERE_API_KEY>"),

"output_dimension",

value(512)))

.build()))

.build()))

.get();

using Qdrant.Client;

using Qdrant.Client.Grpc;

var client = new QdrantClient(

host: "xyz-example.qdrant.io", port: 6334, https: true, apiKey: "<your-api-key>");

await client.UpsertAsync(

collectionName: "{collection_name}",

points: new List<PointStruct>

{

new()

{

Id = 1,

Vectors = new Image()

{

Model = "cohere/embed-v4.0",

Image_ =

"data:image/png;base64,iVBORw0KGgoAAAANSUhEUgAAAAoAAAAKCAYAAACNMs+9AAAAFUlEQVR42mNk+M9Qz0AEYBxVSF+FAAhKDveksOjmAAAAAElFTkSuQmCC",

Options =

{

["cohere-api-key"] = "<YOUR_COHERE_API_KEY>",

["output_dimension"] = 512,

},

},

},

}

);

import (

"context"

"github.com/qdrant/go-client/qdrant"

)

client, err := qdrant.NewClient(&qdrant.Config{

Host: "xyz-example.qdrant.io",

Port: 6334,

APIKey: "<paste-your-api-key-here>",

UseTLS: true,

})

if err != nil {

panic(err)

}

ctx := context.Background()

client.Upsert(ctx, &qdrant.UpsertPoints{

CollectionName: "{collection_name}",

Points: []*qdrant.PointStruct{

{

Id: qdrant.NewIDNum(uint64(1)),

Vectors: qdrant.NewVectorsImage(&qdrant.Image{

Model: "cohere/embed-v4.0",

Image: qdrant.NewValueString("data:image/png;base64,iVBORw0KGgoAAAANSUhEUgAAAAoAAAAKCAYAAACNMs+9AAAAFUlEQVR42mNk+M9Qz0AEYBxVSF+FAAhKDveksOjmAAAAAElFTkSuQmCC"),

Options: qdrant.NewValueMap(map[string]any{

"cohere-api-key": "<YOUR_COHERE_API_KEY>",

"output_dimension": 512,

}),

}),

},

},

})

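Note that the Cohere embed-v4.0 model does not accept images passed as URLs. You must provide the image base64-encoded as a data URL.

At query time, you can use the same model by prefixing the model name with cohere/ and providing your Cohere API key in the options object. This example again uses the Cohere-specific output_dimension API parameter to reduce the dimensionality to 512.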
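If your image is a local file, you can produce such a data URL with Python's standard base64 module. This is a minimal sketch. The helper names are hypothetical, and the default MIME type image/png is an assumption; adjust it to match your file format.

```python
import base64
from pathlib import Path


def to_data_url(image_bytes: bytes, mime_type: str = "image/png") -> str:
    """Encode raw image bytes as a data URL: data:<mime>;base64,<payload>."""
    payload = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime_type};base64,{payload}"


def file_to_data_url(path: str, mime_type: str = "image/png") -> str:
    """Read an image file from disk and return it as a data URL."""
    return to_data_url(Path(path).read_bytes(), mime_type)


# The first four bytes of every PNG file, encoded on their own:
print(to_data_url(b"\x89PNG"))
```

Pass the resulting string as the image value of the inference object, as in the REST example for embed-v4.0.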

POST /collections/{collection_name}/points/query

{

"query": {

"text": "a green square",

"model": "cohere/embed-v4.0",

"options": {

"cohere-api-key": "<YOUR_COHERE_API_KEY>",

"output_dimension": 512

}

}

}

from qdrant_client import QdrantClient, models

client = QdrantClient(

url="https://xyz-example.qdrant.io:6333",

api_key="<your-api-key>",

cloud_inference=True

)

client.query_points(

collection_name="{collection_name}",

query=models.Document(

text="a green square",

model="cohere/embed-v4.0",

options={

"cohere-api-key": "<your_cohere_api_key>",

"output_dimension": 512

}

)

)

import { QdrantClient } from "@qdrant/js-client-rest";

const client = new QdrantClient({ host: "localhost", port: 6333 });

client.query("{collection_name}", {

query: {

text: 'a green square',

model: 'cohere/embed-v4.0',

options: {

'cohere-api-key': '<your_cohere_api_key>',

output_dimension: 512,

},

},

});

use qdrant_client::{

Qdrant, QdrantError,

qdrant::{Document, Query, QueryPointsBuilder, Value},

};

use std::collections::HashMap;

let client = Qdrant::from_url("<your-qdrant-url>").build().unwrap();

let mut options = HashMap::<String, Value>::new();

options.insert("cohere-api-key".to_string(), "<YOUR_COHERE_API_KEY>".into());

options.insert("output_dimension".to_string(), 512.into());

client

.query(

QueryPointsBuilder::new("{collection_name}")

.query(Query::new_nearest(Document {

text: "a green square".into(),

model: "cohere/embed-v4.0".into(),

options,

}))

.build(),

)

.await?;

import static io.qdrant.client.QueryFactory.nearest;

import static io.qdrant.client.ValueFactory.value;

import io.qdrant.client.QdrantClient;

import io.qdrant.client.QdrantGrpcClient;

import io.qdrant.client.grpc.Points;

import io.qdrant.client.grpc.Points.Document;

import java.util.Map;

QdrantClient client =

new QdrantClient(

QdrantGrpcClient.newBuilder("xyz-example.qdrant.io", 6334, true)

.withApiKey("<your-api-key>")

.build());

client

.queryAsync(

Points.QueryPoints.newBuilder()

.setCollectionName("{collection_name}")

.setQuery(

nearest(

Document.newBuilder()

.setModel("cohere/embed-v4.0")

.setText("a green square")

.putAllOptions(

Map.of(

"cohere-api-key",

value("<YOUR_COHERE_API_KEY>"),

"output_dimension",

value(512)))

.build()))

.build())

.get();

using Qdrant.Client;

using Qdrant.Client.Grpc;

var client = new QdrantClient(

host: "xyz-example.qdrant.io",

port: 6334,

https: true,

apiKey: "<your-api-key>"

);

await client.QueryAsync(

collectionName: "{collection_name}",

query: new Document()

{

Model = "cohere/embed-v4.0",

Text = "a green square",

Options = { ["cohere-api-key"] = "<YOUR_COHERE_API_KEY>", ["output_dimension"] = 512 },

}

);

import (

"context"

"github.com/qdrant/go-client/qdrant"

)

client, err := qdrant.NewClient(&qdrant.Config{

Host: "xyz-example.qdrant.io",

Port: 6334,

APIKey: "<paste-your-api-key-here>",

UseTLS: true,

})

if err != nil {

panic(err)

}

ctx := context.Background()

client.Query(ctx, &qdrant.QueryPoints{

CollectionName: "{collection_name}",

Query: qdrant.NewQueryNearest(

qdrant.NewVectorInputDocument(&qdrant.Document{

Text: "a green square",

Model: "cohere/embed-v4.0",

Options: qdrant.NewValueMap(map[string]any{

"cohere-api-key": "<YOUR_COHERE_API_KEY>",

"output_dimension": 512,

}),

}),

),

})

Note that Qdrant does not store or cache your Cohere API key, so you must provide it with every inference request.
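Because the key has to accompany every request, it helps to build the options object in one place and read the key at call time rather than hard-coding it. This is a minimal sketch. It assumes the key is stored in an environment variable named COHERE_API_KEY, which is an arbitrary choice that neither Qdrant nor Cohere requires. cohere_options is a hypothetical helper.

```python
import os


def cohere_options(output_dimension: int = 512,
                   env_var: str = "COHERE_API_KEY") -> dict:
    """Build the `options` object for a cohere/ inference request.

    The key is read from the environment on every call, because Qdrant
    does not keep it between requests. The variable name is an assumption.
    """
    api_key = os.environ.get(env_var)
    if not api_key:
        raise RuntimeError(f"environment variable {env_var} is not set")
    return {"cohere-api-key": api_key, "output_dimension": output_dimension}
```

With the Python client, you can pass the result as options=cohere_options() to models.Document, both when upserting and when querying.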

Jina AI

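When you prefix the model name with jinaai/, the embedding request is automatically routed to the Jina AI Embeddings API.

For example, to use Jina AI's multimodal jina-clip-v2 model when ingesting data, prefix the model name with jinaai/ and provide your Jina AI API key in the options object. This example uses the Jina AI-specific dimensions API parameter to reduce the dimensionality to 512.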

PUT /collections/{collection_name}/points?wait=true

{

"points": [

{

"id": 1,

"vector": {

"image": "https://qdrant.org.cn/example.png",

"model": "jinaai/jina-clip-v2",

"options": {

"jina-api-key": "<YOUR_JINAAI_API_KEY>",

"dimensions": 512

}

}

}

]

}

from qdrant_client import QdrantClient, models

client = QdrantClient(

url="https://xyz-example.qdrant.io:6333",

api_key="<your-api-key>",

cloud_inference=True

)

client.upsert(

collection_name="{collection_name}",

points=[

models.PointStruct(

id=1,

vector=models.Image(

image="https://qdrant.org.cn/example.png",

model="jinaai/jina-clip-v2",

options={

"jina-api-key": "<your_jinaai_api_key>",

"dimensions": 512

}

)

)

]

)

import { QdrantClient } from "@qdrant/js-client-rest";

const client = new QdrantClient({ host: "localhost", port: 6333 });

client.upsert("{collection_name}", {

points: [

{

id: 1,

vector: {

image: 'https://qdrant.org.cn/example.png',

model: 'jinaai/jina-clip-v2',

options: {

'jina-api-key': '<your_jinaai_api_key>',

dimensions: 512,

},

},

},

],

});

use qdrant_client::{

Payload, Qdrant, QdrantError,

qdrant::{Image, PointStruct, UpsertPointsBuilder},

};

use std::collections::HashMap;

let client = Qdrant::from_url("<your-qdrant-url>").build()?;

let mut options = HashMap::new();

options.insert("jina-api-key".to_string(), "<YOUR_JINAAI_API_KEY>".into());

options.insert("dimensions".to_string(), 512.into());

client

.upsert_points(UpsertPointsBuilder::new("{collection_name}",

vec![

PointStruct::new(1,

Image {

image: Some("https://qdrant.org.cn/example.png".into()),

model: "jinaai/jina-clip-v2".into(),

options,

},

Payload::default())

]).wait(true))

.await?;

import static io.qdrant.client.PointIdFactory.id;

import static io.qdrant.client.ValueFactory.value;

import static io.qdrant.client.VectorsFactory.vectors;

import io.qdrant.client.QdrantClient;

import io.qdrant.client.QdrantGrpcClient;

import io.qdrant.client.grpc.Points.Image;

import io.qdrant.client.grpc.Points.PointStruct;

import java.util.List;

import java.util.Map;

QdrantClient client =

new QdrantClient(

QdrantGrpcClient.newBuilder("xyz-example.qdrant.io", 6334, true)

.withApiKey("<your-api-key>")

.build());

client

.upsertAsync(

"{collection_name}",

List.of(

PointStruct.newBuilder()

.setId(id(1))

.setVectors(

vectors(

Image.newBuilder()

.setModel("jinaai/jina-clip-v2")

.setImage(value("https://qdrant.org.cn/example.png"))

.putAllOptions(

Map.of(

"jina-api-key",

value("<YOUR_JINAAI_API_KEY>"),

"dimensions",

value(512)))

.build()))

.build()))

.get();

using Qdrant.Client;

using Qdrant.Client.Grpc;

var client = new QdrantClient(

host: "xyz-example.qdrant.io",

port: 6334,

https: true,

apiKey: "<your-api-key>"

);

await client.UpsertAsync(

collectionName: "{collection_name}",

points: new List<PointStruct>

{

new()

{

Id = 1,

Vectors = new Image()

{

Model = "jinaai/jina-clip-v2",

Image_ = "https://qdrant.org.cn/example.png",

Options = { ["jina-api-key"] = "<YOUR_JINAAI_API_KEY>", ["dimensions"] = 512 },

},

},

}

);

import (

"context"

"github.com/qdrant/go-client/qdrant"

)

client, err := qdrant.NewClient(&qdrant.Config{

Host: "xyz-example.qdrant.io",

Port: 6334,

APIKey: "<paste-your-api-key-here>",

UseTLS: true,

})

if err != nil {

panic(err)

}

ctx := context.Background()

client.Upsert(ctx, &qdrant.UpsertPoints{

CollectionName: "{collection_name}",

Points: []*qdrant.PointStruct{

{

Id: qdrant.NewIDNum(uint64(1)),

Vectors: qdrant.NewVectorsImage(&qdrant.Image{

Model: "jinaai/jina-clip-v2",

Image: qdrant.NewValueString("https://qdrant.org.cn/example.png"),

Options: qdrant.NewValueMap(map[string]any{

"jina-api-key": "<YOUR_JINAAI_API_KEY>",

"dimensions": 512,

}),

}),

},

},

})
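The Cohere and Jina AI ingestion examples differ only in the model prefix and in the names of two options. The API key option is cohere-api-key for Cohere and jina-api-key for Jina AI. The dimension option is output_dimension for Cohere and dimensions for Jina AI, the same name the OpenAI example uses. The sketch below collects these names from this document's examples into a table and builds a REST inference object. The function and table are hypothetical, so check each provider's API documentation before relying on them.

```python
# (api-key option, dimension option) per prefix, as used in this document.
# Hypothetical table for illustration only.
PROVIDER_OPTION_KEYS = {
    "openai": ("openai-api-key", "dimensions"),
    "cohere": ("cohere-api-key", "output_dimension"),
    "jinaai": ("jina-api-key", "dimensions"),
}


def inference_object(kind: str, value: str, model: str,
                     api_key: str, dimension: int) -> dict:
    """Return a REST inference object such as {"image": ..., "model": ..., "options": ...}."""
    if kind not in ("text", "image"):
        raise ValueError("kind must be 'text' or 'image'")
    provider = model.split("/", 1)[0]
    if provider not in PROVIDER_OPTION_KEYS:
        raise ValueError(f"no external provider prefix in {model!r}")
    key_name, dim_name = PROVIDER_OPTION_KEYS[provider]
    return {
        kind: value,
        "model": model,
        "options": {key_name: api_key, dim_name: dimension},
    }
```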

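At query time, you can use the same model by prefixing the model name with jinaai/ and providing your Jina AI API key in the options object. This example again uses the Jina AI-specific dimensions API parameter to reduce the dimensionality to 512.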

POST /collections/{collection_name}/points/query

{

"query": {

"text": "Mission to Mars",

"model": "jinaai/jina-clip-v2",

"options": {

"jina-api-key": "<YOUR_JINAAI_API_KEY>",

"dimensions": 512

}

}

}

from qdrant_client import QdrantClient, models

client = QdrantClient(

url="https://xyz-example.qdrant.io:6333",

api_key="<your-api-key>",

cloud_inference=True

)

client.query_points(

collection_name="{collection_name}",

query=models.Document(

text="Mission to Mars",

model="jinaai/jina-clip-v2",

options={

"jina-api-key": "<your_jinaai_api_key>",

"dimensions": 512

}

)

)

import { QdrantClient } from "@qdrant/js-client-rest";

const client = new QdrantClient({ host: "localhost", port: 6333 });

client.query("{collection_name}", {

query: {

text: 'Mission to Mars',

model: 'jinaai/jina-clip-v2',

options: {

'jina-api-key': '<your_jinaai_api_key>',

dimensions: 512,

},

},

});

use qdrant_client::{

Qdrant, QdrantError,

qdrant::{Document, Query, QueryPointsBuilder, Value},

};

use std::collections::HashMap;

let client = Qdrant::from_url("<your-qdrant-url>").build().unwrap();

let mut options = HashMap::<String, Value>::new();

options.insert("jina-api-key".to_string(), "<YOUR_JINAAI_API_KEY>".into());

options.insert("dimensions".to_string(), 512.into());

client

.query(

QueryPointsBuilder::new("{collection_name}")

.query(Query::new_nearest(Document {

text: "Mission to Mars".into(),

model: "jinaai/jina-clip-v2".into(),

options,

}))

.build(),

)

.await?;

import static io.qdrant.client.QueryFactory.nearest;

import static io.qdrant.client.ValueFactory.value;

import io.qdrant.client.QdrantClient;

import io.qdrant.client.QdrantGrpcClient;

import io.qdrant.client.grpc.Points;

import io.qdrant.client.grpc.Points.Document;

import java.util.Map;

QdrantClient client =

new QdrantClient(

QdrantGrpcClient.newBuilder("xyz-example.qdrant.io", 6334, true)

.withApiKey("<your-api-key>")

.build());

client

.queryAsync(

Points.QueryPoints.newBuilder()

.setCollectionName("{collection_name}")

.setQuery(

nearest(

Document.newBuilder()

.setModel("jinaai/jina-clip-v2")

.setText("Mission to Mars")

.putAllOptions(

Map.of(

"jina-api-key",

value("<YOUR_JINAAI_API_KEY>"),

"dimensions",

value(512)))

.build()))

.build())

.get();

using Qdrant.Client;

using Qdrant.Client.Grpc;

var client = new QdrantClient(

host: "xyz-example.qdrant.io",

port: 6334,

https: true,

apiKey: "<your-api-key>"

);

await client.QueryAsync(

collectionName: "{collection_name}",

query: new Document()

{

Model = "jinaai/jina-clip-v2",

Text = "Mission to Mars",

Options = { ["jina-api-key"] = "<YOUR_JINAAI_API_KEY>", ["dimensions"] = 512 },

}

);

import (

"context"

"github.com/qdrant/go-client/qdrant"

)

client, err := qdrant.NewClient(&qdrant.Config{

Host: "xyz-example.qdrant.io",

Port: 6334,

APIKey: "<paste-your-api-key-here>",

UseTLS: true,

})

if err != nil {

panic(err)

}

ctx := context.Background()

client.Query(ctx, &qdrant.QueryPoints{

CollectionName: "{collection_name}",

Query: qdrant.NewQueryNearest(

qdrant.NewVectorInputDocument(&qdrant.Document{

Text: "Mission to Mars",

Model: "jinaai/jina-clip-v2",

Options: qdrant.NewValueMap(map[string]any{

"jina-api-key": "<YOUR_JINAAI_API_KEY>",

"dimensions": 512,

}),

}),

),

})

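Note that Qdrant does not store or cache your Jina AI API key, so you must provide it with every inference request.

Multiple inference operations

You can run multiple inference operations in a single request, even when the models are hosted in different places. This example generates three different named vectors for one point: an image embedding from jina-clip-v2, hosted by Jina AI; a text embedding from all-minilm-l6-v2, hosted by Qdrant Cloud; and a BM25 embedding from the bm25 model, computed locally by the Qdrant cluster.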
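To send the REST query for jina-clip-v2 without a client library, you can prepare the request with Python's standard urllib. This is a minimal sketch. build_query_request is hypothetical, and the request is only constructed here, not sent. The Qdrant key goes in the api-key header that Qdrant's REST API uses for authentication. The Jina AI key travels separately inside options.

```python
import json
import urllib.request


def build_query_request(base_url: str, collection: str, qdrant_api_key: str,
                        text: str, jina_api_key: str,
                        dimensions: int = 512) -> urllib.request.Request:
    """Prepare (but do not send) POST /collections/{collection}/points/query."""
    body = {
        "query": {
            "text": text,
            "model": "jinaai/jina-clip-v2",
            # The Jina AI key must be included on every request.
            "options": {"jina-api-key": jina_api_key, "dimensions": dimensions},
        }
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/collections/{collection}/points/query",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": qdrant_api_key},
        method="POST",
    )
```

Calling urllib.request.urlopen(req) on the returned object would actually send the query.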

PUT /collections/{collection_name}/points?wait=true

{

"points": [

{

"id": 1,

"vector": {

"image": {

"image": "https://qdrant.org.cn/example.png",

"model": "jinaai/jina-clip-v2",

"options": {

"jina-api-key": "<YOUR_JINAAI_API_KEY>",

"dimensions": 512

}

},

"text": {

"text": "Mars, the red planet",

"model": "sentence-transformers/all-minilm-l6-v2"

},

"bm25": {

"text": "Mars, the red planet",

"model": "qdrant/bm25"

}

}

}

]

}

from qdrant_client import QdrantClient, models

client = QdrantClient(

url="https://xyz-example.qdrant.io:6333",

api_key="<your-api-key>",

cloud_inference=True

)

client.upsert(

collection_name="{collection_name}",

points=[

models.PointStruct(

id=1,

vector={

"image": models.Image(

image="https://qdrant.org.cn/example.png",

model="jinaai/jina-clip-v2",

options={

"jina-api-key": "<your_jinaai_api_key>",

"dimensions": 512

},

),

"text": models.Document(

text="Mars, the red planet",

model="sentence-transformers/all-minilm-l6-v2",

),

"bm25": models.Document(

text="Mars, the red planet",

model="Qdrant/bm25",

),

},

)

],

)

import { QdrantClient } from "@qdrant/js-client-rest";

const client = new QdrantClient({ host: "localhost", port: 6333 });

client.upsert("{collection_name}", {

points: [

{

id: 1,

vector: {

image: {

image: 'https://qdrant.org.cn/example.png',

model: 'jinaai/jina-clip-v2',

options: {

'jina-api-key': '<your_jinaai_api_key>',

dimensions: 512,

},

},

text: {

text: 'Mars, the red planet',

model: 'sentence-transformers/all-minilm-l6-v2',

},

bm25: {

text: 'Mars, the red planet',

model: 'Qdrant/bm25',

},

},

},

],

});

use qdrant_client::{

Payload, Qdrant, QdrantError,

qdrant::{Document, Image, NamedVectors, PointStruct, UpsertPointsBuilder},

};

use std::collections::HashMap;

let client = Qdrant::from_url("<your-qdrant-url>").build()?;

let mut jina_options = HashMap::new();

jina_options.insert("jina-api-key".to_string(), "<YOUR_JINAAI_API_KEY>".into());

jina_options.insert("dimensions".to_string(), 512.into());

client

.upsert_points(

UpsertPointsBuilder::new(

"{collection_name}",

vec![PointStruct::new(

1,

NamedVectors::default()

.add_vector(

"image",

Image {

image: Some("https://qdrant.org.cn/example.png".into()),

model: "jinaai/jina-clip-v2".into(),

options: jina_options,

},

)

.add_vector(

"text",

Document {

text: "Mars, the red planet".into(),

model: "sentence-transformers/all-minilm-l6-v2".into(),

..Default::default()

},

)

.add_vector(

"bm25",

Document {

text: "Mars, the red planet".into(),

model: "qdrant/bm25".into(),

..Default::default()

},

),

Payload::default(),

)],

)

.wait(true),

)

.await?;

import static io.qdrant.client.PointIdFactory.id;

import static io.qdrant.client.ValueFactory.value;

import static io.qdrant.client.VectorFactory.vector;

import static io.qdrant.client.VectorsFactory.namedVectors;

import io.qdrant.client.QdrantClient;

import io.qdrant.client.QdrantGrpcClient;

import io.qdrant.client.grpc.Points.Document;

import io.qdrant.client.grpc.Points.Image;

import io.qdrant.client.grpc.Points.PointStruct;

import java.util.List;

import java.util.Map;

QdrantClient client =

new QdrantClient(

QdrantGrpcClient.newBuilder("xyz-example.qdrant.io", 6334, true)

.withApiKey("<your-api-key>")

.build());

client

.upsertAsync(

"{collection_name}",

List.of(

PointStruct.newBuilder()

.setId(id(1))

.setVectors(

namedVectors(

Map.of(

"image",

vector(

Image.newBuilder()

.setModel("jinaai/jina-clip-v2")

.setImage(value("https://qdrant.org.cn/example.png"))

.putAllOptions(

Map.of(

"jina-api-key",

value("<YOUR_JINAAI_API_KEY>"),

"dimensions",

value(512)))

.build()),

"text",

vector(

Document.newBuilder()

.setModel("sentence-transformers/all-minilm-l6-v2")

.setText("Mars, the red planet")

.build()),

"bm25",

vector(

Document.newBuilder()

.setModel("qdrant/bm25")

.setText("Mars, the red planet")

.build()))))

.build()))

.get();

using Qdrant.Client;

using Qdrant.Client.Grpc;

var client = new QdrantClient(

host: "xyz-example.qdrant.io", port: 6334, https: true, apiKey: "<your-api-key>");

await client.UpsertAsync(

collectionName: "{collection_name}",

points: new List<PointStruct>

{

new()

{

Id = 1,

Vectors = new Dictionary<string, Vector>

{

["image"] = new Image()

{

Model = "jinaai/jina-clip-v2",

Image_ = "https://qdrant.org.cn/example.png",

Options = { ["jina-api-key"] = "<YOUR_JINAAI_API_KEY>", ["dimensions"] = 512 },

},

["text"] = new Document()

{

Model = "sentence-transformers/all-minilm-l6-v2",

Text = "Mars, the red planet",

},

["bm25"] = new Document() { Model = "qdrant/bm25", Text = "Mars, the red planet" },

},

},

}

);

import (

"context"

"github.com/qdrant/go-client/qdrant"

)

client, err := qdrant.NewClient(&qdrant.Config{

Host: "xyz-example.qdrant.io",

Port: 6334,

APIKey: "<paste-your-api-key-here>",

UseTLS: true,

})

if err != nil {

panic(err)

}

ctx := context.Background()

client.Upsert(ctx, &qdrant.UpsertPoints{

CollectionName: "{collection_name}",

Points: []*qdrant.PointStruct{

{

Id: qdrant.NewIDNum(uint64(1)),

Vectors: qdrant.NewVectorsMap(map[string]*qdrant.Vector{

"image": qdrant.NewVectorImage(&qdrant.Image{

Model: "jinaai/jina-clip-v2",

Image: qdrant.NewValueString("https://qdrant.org.cn/example.png"),

Options: qdrant.NewValueMap(map[string]any{

"jina-api-key": "<YOUR_JINAAI_API_KEY>",

"dimensions": 512,

}),

}),

"text": qdrant.NewVectorDocument(&qdrant.Document{

Model: "sentence-transformers/all-minilm-l6-v2",

Text: "Mars, the red planet",

}),

"bm25": qdrant.NewVectorDocument(&qdrant.Document{

Model: "qdrant/bm25",

Text: "Mars, the red planet",

}),

}),

},

},

})
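Seen as plain data, the multi-inference request is a single point whose vector field maps each vector name to its own inference object. Qdrant resolves each entry according to its model. This is a minimal sketch that builds that REST body with the standard json module. The helper names are hypothetical. The sketch also assumes the collection already defines image and text dense vectors and a bm25 sparse vector.

```python
import json


def build_multi_inference_point(point_id: int, image_url: str, text: str,
                                jina_api_key: str) -> dict:
    """One point, three named vectors, each produced by a different model."""
    return {
        "id": point_id,
        "vector": {
            # External inference: routed to Jina AI by the jinaai/ prefix.
            "image": {
                "image": image_url,
                "model": "jinaai/jina-clip-v2",
                "options": {"jina-api-key": jina_api_key, "dimensions": 512},
            },
            # Qdrant Cloud Inference.
            "text": {"text": text, "model": "sentence-transformers/all-minilm-l6-v2"},
            # Computed by the Qdrant cluster itself.
            "bm25": {"text": text, "model": "qdrant/bm25"},
        },
    }


def upsert_body(points: list[dict]) -> str:
    """Serialize the body for PUT /collections/{collection_name}/points?wait=true."""
    return json.dumps({"points": points})
```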