Configure Multitenancy

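How many collections should you create? In most cases, a single collection per embedding model, with payload-based partitioning for different tenants and use cases. This approach is called multitenancy. It is efficient for most users, but it requires additional configuration. This document shows you how to set it up.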

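When should you create multiple collections? When you have a limited number of users and you need isolation. This approach is flexible, but it may be more costly, since creating numerous collections can result in resource overhead. You also need to ensure that the collections do not affect each other in any way, including performance-wise.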

Partition by payload

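When an instance is shared between multiple users, you may need to partition vectors by user. This is done so that each user can only access their own vectors and cannot see the vectors of other users.

- Store a group_id field in the payload of each point in the collection.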

PUT /collections/{collection_name}/points

{

"points": [

{

"id": 1,

"payload": {"group_id": "user_1"},

"vector": [0.9, 0.1, 0.1]

},

{

"id": 2,

"payload": {"group_id": "user_1"},

"vector": [0.1, 0.9, 0.1]

},

{

"id": 3,

"payload": {"group_id": "user_2"},

"vector": [0.1, 0.1, 0.9]

}

]

}

from qdrant_client import QdrantClient, models

client = QdrantClient(url="http://localhost:6333")

client.upsert(

collection_name="{collection_name}",

points=[

models.PointStruct(

id=1,

payload={"group_id": "user_1"},

vector=[0.9, 0.1, 0.1],

),

models.PointStruct(

id=2,

payload={"group_id": "user_1"},

vector=[0.1, 0.9, 0.1],

),

models.PointStruct(

id=3,

payload={"group_id": "user_2"},

vector=[0.1, 0.1, 0.9],

),

],

)

import { QdrantClient } from "@qdrant/js-client-rest";

const client = new QdrantClient({ host: "localhost", port: 6333 });

client.upsert("{collection_name}", {

points: [

{

id: 1,

payload: { group_id: "user_1" },

vector: [0.9, 0.1, 0.1],

},

{

id: 2,

payload: { group_id: "user_1" },

vector: [0.1, 0.9, 0.1],

},

{

id: 3,

payload: { group_id: "user_2" },

vector: [0.1, 0.1, 0.9],

},

],

});

use qdrant_client::qdrant::{PointStruct, UpsertPointsBuilder};

use qdrant_client::Qdrant;

let client = Qdrant::from_url("http://localhost:6334").build()?;

client

.upsert_points(UpsertPointsBuilder::new(

"{collection_name}",

vec![

PointStruct::new(1, vec![0.9, 0.1, 0.1], [("group_id", "user_1".into())]),

PointStruct::new(2, vec![0.1, 0.9, 0.1], [("group_id", "user_1".into())]),

PointStruct::new(3, vec![0.1, 0.1, 0.9], [("group_id", "user_2".into())]),

],

))

.await?;

import java.util.List;

import java.util.Map;

import io.qdrant.client.QdrantClient;

import io.qdrant.client.QdrantGrpcClient;

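import io.qdrant.client.grpc.Points.PointStruct;

import static io.qdrant.client.PointIdFactory.id;

import static io.qdrant.client.ValueFactory.value;

import static io.qdrant.client.VectorsFactory.vectors;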

QdrantClient client =

new QdrantClient(QdrantGrpcClient.newBuilder("localhost", 6334, false).build());

client

.upsertAsync(

"{collection_name}",

List.of(

PointStruct.newBuilder()

.setId(id(1))

.setVectors(vectors(0.9f, 0.1f, 0.1f))

.putAllPayload(Map.of("group_id", value("user_1")))

.build(),

PointStruct.newBuilder()

.setId(id(2))

.setVectors(vectors(0.1f, 0.9f, 0.1f))

.putAllPayload(Map.of("group_id", value("user_1")))

.build(),

PointStruct.newBuilder()

.setId(id(3))

.setVectors(vectors(0.1f, 0.1f, 0.9f))

.putAllPayload(Map.of("group_id", value("user_2")))

.build()))

.get();

using Qdrant.Client;

using Qdrant.Client.Grpc;

var client = new QdrantClient("localhost", 6334);

await client.UpsertAsync(

collectionName: "{collection_name}",

points: new List<PointStruct>

{

new()

{

Id = 1,

Vectors = new[] { 0.9f, 0.1f, 0.1f },

Payload = { ["group_id"] = "user_1" }

},

new()

{

Id = 2,

Vectors = new[] { 0.1f, 0.9f, 0.1f },

Payload = { ["group_id"] = "user_1" }

},

new()

{

Id = 3,

Vectors = new[] { 0.1f, 0.1f, 0.9f },

Payload = { ["group_id"] = "user_2" }

}

}

);

import (

"context"

"github.com/qdrant/go-client/qdrant"

)

client, err := qdrant.NewClient(&qdrant.Config{

Host: "localhost",

Port: 6334,

})

client.Upsert(context.Background(), &qdrant.UpsertPoints{

CollectionName: "{collection_name}",

Points: []*qdrant.PointStruct{

{

Id: qdrant.NewIDNum(1),

Vectors: qdrant.NewVectors(0.9, 0.1, 0.1),

Payload: qdrant.NewValueMap(map[string]any{"group_id": "user_1"}),

},

{

Id: qdrant.NewIDNum(2),

Vectors: qdrant.NewVectors(0.1, 0.9, 0.1),

Payload: qdrant.NewValueMap(map[string]any{"group_id": "user_1"}),

},

{

Id: qdrant.NewIDNum(3),

Vectors: qdrant.NewVectors(0.1, 0.1, 0.9),

Payload: qdrant.NewValueMap(map[string]any{"group_id": "user_2"}),

},

},

})

- Use a filter on group_id to restrict each query to a single user's vectors.

POST /collections/{collection_name}/points/query

{

"query": [0.1, 0.1, 0.9],

"filter": {

"must": [

{

"key": "group_id",

"match": {

"value": "user_1"

}

}

]

},

"limit": 10

}

from qdrant_client import QdrantClient, models

client = QdrantClient(url="http://localhost:6333")

client.query_points(

collection_name="{collection_name}",

query=[0.1, 0.1, 0.9],

query_filter=models.Filter(

must=[

models.FieldCondition(

key="group_id",

match=models.MatchValue(

value="user_1",

),

)

]

),

limit=10,

)

import { QdrantClient } from "@qdrant/js-client-rest";

const client = new QdrantClient({ host: "localhost", port: 6333 });

client.query("{collection_name}", {

query: [0.1, 0.1, 0.9],

filter: {

must: [{ key: "group_id", match: { value: "user_1" } }],

},

limit: 10,

});

use qdrant_client::qdrant::{Condition, Filter, QueryPointsBuilder};

use qdrant_client::Qdrant;

let client = Qdrant::from_url("http://localhost:6334").build()?;

client

.query(

QueryPointsBuilder::new("{collection_name}")

.query(vec![0.1, 0.1, 0.9])

.limit(10)

.filter(Filter::must([Condition::matches(

"group_id",

"user_1".to_string(),

)])),

)

.await?;

import java.util.List;

import io.qdrant.client.QdrantClient;

import io.qdrant.client.QdrantGrpcClient;

import io.qdrant.client.grpc.Common.Filter;

import io.qdrant.client.grpc.Points.QueryPoints;

import static io.qdrant.client.QueryFactory.nearest;

import static io.qdrant.client.ConditionFactory.matchKeyword;

QdrantClient client =

new QdrantClient(QdrantGrpcClient.newBuilder("localhost", 6334, false).build());

client.queryAsync(

QueryPoints.newBuilder()

.setCollectionName("{collection_name}")

.setFilter(

Filter.newBuilder().addMust(matchKeyword("group_id", "user_1")).build())

.setQuery(nearest(0.1f, 0.1f, 0.9f))

.setLimit(10)

.build())

.get();

using Qdrant.Client;

using Qdrant.Client.Grpc;

using static Qdrant.Client.Grpc.Conditions;

var client = new QdrantClient("localhost", 6334);

await client.QueryAsync(

collectionName: "{collection_name}",

query: new float[] { 0.1f, 0.1f, 0.9f },

filter: MatchKeyword("group_id", "user_1"),

limit: 10

);

import (

"context"

"github.com/qdrant/go-client/qdrant"

)

client, err := qdrant.NewClient(&qdrant.Config{

Host: "localhost",

Port: 6334,

})

client.Query(context.Background(), &qdrant.QueryPoints{

CollectionName: "{collection_name}",

Query: qdrant.NewQuery(0.1, 0.1, 0.9),

Filter: &qdrant.Filter{

Must: []*qdrant.Condition{

qdrant.NewMatch("group_id", "user_1"),

},

},

})

Calibrate performance

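Indexing speed may become a bottleneck in this setup, because the vectors of every user are indexed into the same collection. To avoid this bottleneck, consider bypassing the construction of a global vector index for the entire collection and building the index only for individual groups instead.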

With this strategy, Qdrant indexes the vectors of each user independently, which significantly speeds up the process.

To implement this approach, you should:

- Set payload_m in the HNSW config to a non-zero value, such as 16.

- Set m in the HNSW config to 0. This disables building a global index for the whole collection.

PUT /collections/{collection_name}

{

"vectors": {

"size": 768,

"distance": "Cosine"

},

"hnsw_config": {

"payload_m": 16,

"m": 0

}

}

from qdrant_client import QdrantClient, models

client = QdrantClient(url="http://localhost:6333")

client.create_collection(

collection_name="{collection_name}",

vectors_config=models.VectorParams(size=768, distance=models.Distance.COSINE),

hnsw_config=models.HnswConfigDiff(

payload_m=16,

m=0,

),

)

import { QdrantClient } from "@qdrant/js-client-rest";

const client = new QdrantClient({ host: "localhost", port: 6333 });

client.createCollection("{collection_name}", {

vectors: {

size: 768,

distance: "Cosine",

},

hnsw_config: {

payload_m: 16,

m: 0,

},

});

use qdrant_client::qdrant::{

CreateCollectionBuilder, Distance, HnswConfigDiffBuilder, VectorParamsBuilder,

};

use qdrant_client::Qdrant;

let client = Qdrant::from_url("http://localhost:6334").build()?;

client

.create_collection(

CreateCollectionBuilder::new("{collection_name}")

.vectors_config(VectorParamsBuilder::new(768, Distance::Cosine))

.hnsw_config(HnswConfigDiffBuilder::default().payload_m(16).m(0)),

)

.await?;

import io.qdrant.client.QdrantClient;

import io.qdrant.client.QdrantGrpcClient;

import io.qdrant.client.grpc.Collections.CreateCollection;

import io.qdrant.client.grpc.Collections.Distance;

import io.qdrant.client.grpc.Collections.HnswConfigDiff;

import io.qdrant.client.grpc.Collections.VectorParams;

import io.qdrant.client.grpc.Collections.VectorsConfig;

QdrantClient client =

new QdrantClient(QdrantGrpcClient.newBuilder("localhost", 6334, false).build());

client

.createCollectionAsync(

CreateCollection.newBuilder()

.setCollectionName("{collection_name}")

.setVectorsConfig(

VectorsConfig.newBuilder()

.setParams(

VectorParams.newBuilder()

.setSize(768)

.setDistance(Distance.Cosine)

.build())

.build())

.setHnswConfig(HnswConfigDiff.newBuilder().setPayloadM(16).setM(0).build())

.build())

.get();

using Qdrant.Client;

using Qdrant.Client.Grpc;

var client = new QdrantClient("localhost", 6334);

await client.CreateCollectionAsync(

collectionName: "{collection_name}",

vectorsConfig: new VectorParams { Size = 768, Distance = Distance.Cosine },

hnswConfig: new HnswConfigDiff { PayloadM = 16, M = 0 }

);

import (

"context"

"github.com/qdrant/go-client/qdrant"

)

client, err := qdrant.NewClient(&qdrant.Config{

Host: "localhost",

Port: 6334,

})

client.CreateCollection(context.Background(), &qdrant.CreateCollection{

CollectionName: "{collection_name}",

VectorsConfig: qdrant.NewVectorsConfig(&qdrant.VectorParams{

Size: 768,

Distance: qdrant.Distance_Cosine,

}),

HnswConfig: &qdrant.HnswConfigDiff{

PayloadM: qdrant.PtrOf(uint64(16)),

M: qdrant.PtrOf(uint64(0)),

},

})

- Create a keyword payload index for the group_id field.

PUT /collections/{collection_name}/index

{

"field_name": "group_id",

"field_schema": {

"type": "keyword",

"is_tenant": true

}

}

client.create_payload_index(

collection_name="{collection_name}",

field_name="group_id",

field_schema=models.KeywordIndexParams(

type="keyword",

is_tenant=True,

),

)

client.createPayloadIndex("{collection_name}", {

field_name: "group_id",

field_schema: {

type: "keyword",

is_tenant: true,

},

});

use qdrant_client::qdrant::{

CreateFieldIndexCollectionBuilder,

KeywordIndexParamsBuilder,

FieldType

};

use qdrant_client::{Qdrant, QdrantError};

let client = Qdrant::from_url("http://localhost:6334").build()?;

client.create_field_index(

CreateFieldIndexCollectionBuilder::new(

"{collection_name}",

"group_id",

FieldType::Keyword,

).field_index_params(

KeywordIndexParamsBuilder::default()

.is_tenant(true)

)

).await?;

import io.qdrant.client.QdrantClient;

import io.qdrant.client.QdrantGrpcClient;

import io.qdrant.client.grpc.Collections.PayloadIndexParams;

import io.qdrant.client.grpc.Collections.PayloadSchemaType;

import io.qdrant.client.grpc.Collections.KeywordIndexParams;

QdrantClient client =

new QdrantClient(QdrantGrpcClient.newBuilder("localhost", 6334, false).build());

client

.createPayloadIndexAsync(

"{collection_name}",

"group_id",

PayloadSchemaType.Keyword,

PayloadIndexParams.newBuilder()

.setKeywordIndexParams(

KeywordIndexParams.newBuilder()

.setIsTenant(true)

.build())

.build(),

null,

null,

null)

.get();

using Qdrant.Client;

var client = new QdrantClient("localhost", 6334);

await client.CreatePayloadIndexAsync(

collectionName: "{collection_name}",

fieldName: "group_id",

schemaType: PayloadSchemaType.Keyword,

indexParams: new PayloadIndexParams

{

KeywordIndexParams = new KeywordIndexParams

{

IsTenant = true

}

}

);

import (

"context"

"github.com/qdrant/go-client/qdrant"

)

client, err := qdrant.NewClient(&qdrant.Config{

Host: "localhost",

Port: 6334,

})

client.CreateFieldIndex(context.Background(), &qdrant.CreateFieldIndexCollection{

CollectionName: "{collection_name}",

FieldName: "group_id",

FieldType: qdrant.FieldType_FieldTypeKeyword.Enum(),

FieldIndexParams: qdrant.NewPayloadIndexParams(

&qdrant.KeywordIndexParams{

IsTenant: qdrant.PtrOf(true),

}),

})

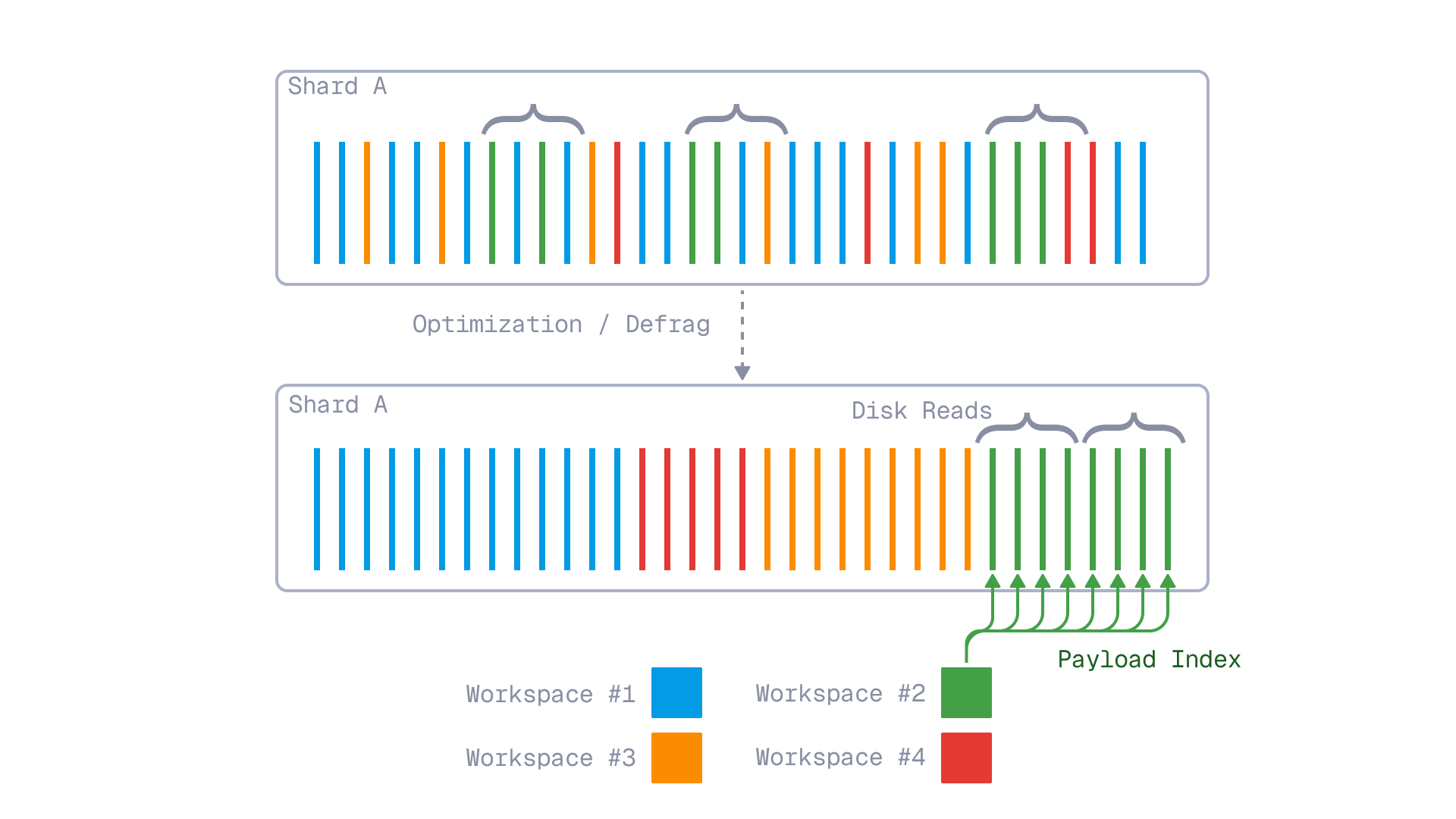

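The is_tenant=true parameter is optional, but specifying it gives the storage additional information about the usage patterns the collection is going to see. When it is specified, the storage structure is organized so that vectors of the same tenant are co-located, which can significantly improve performance by utilizing sequential reads during queries.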

Vectors are grouped by tenant ID when is_tenant=true is used

Limitations

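One downside of this approach is that global requests (without the group_id filter) will be slower, since they need to scan all groups to identify the nearest neighbors.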

Tiered multitenancy

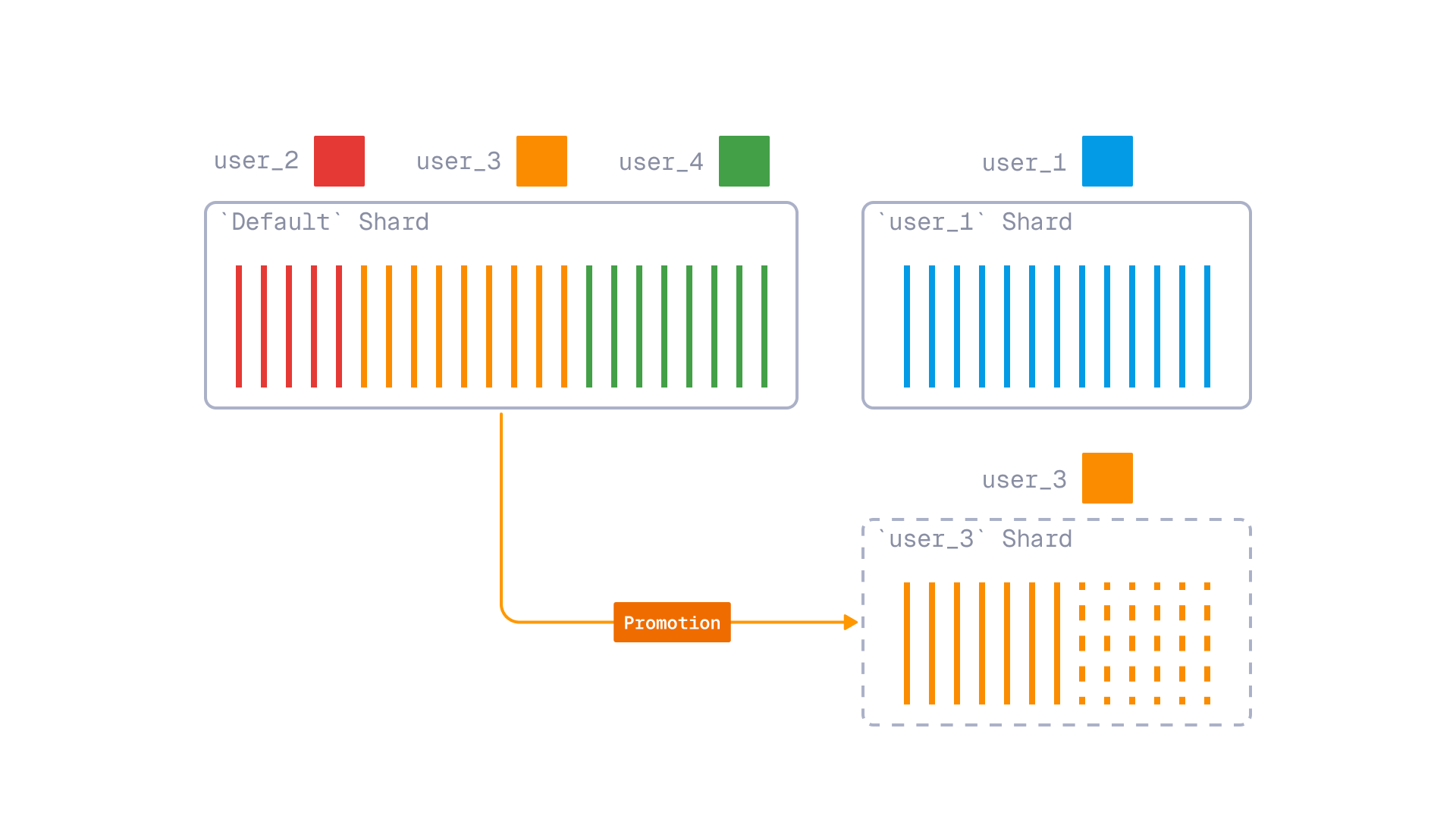

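In some real-world applications, tenants are not distributed evenly. For example, a SaaS application might have a few large customers and many small ones. Large tenants may need extended resources and isolation, while small tenants should not create too much overhead.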

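One way to address this is to introduce application-level logic that separates tenants into different collections based on their size or resource requirements. This approach has a downside, however: we may not know in advance which tenants will grow large and which will stay small. In addition, application-level logic adds complexity to the system and requires an additional source of truth for managing tenant placement.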

To address this problem, Qdrant v1.16.0 provides a built-in mechanism for tiered multitenancy.

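With tiered multitenancy, you can implement two levels of tenant isolation within a single collection, keeping small tenants together in a shared shard while isolating large tenants into their own dedicated shards. Three components in Qdrant make tiered multitenancy possible: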

- User-defined sharding: lets you create named shards within a collection, so that large tenants can be isolated into their own shards.

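- Fallback shards: a special routing mechanism that routes a request either to a dedicated shard (if it exists) or to the shared fallback shard. It keeps requests uniform, with no need to know whether a tenant is dedicated or shared.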

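- Tenant promotion: a mechanism that moves a tenant from the shared fallback shard to its own dedicated shard once it has grown large enough. This process is based on Qdrant's internal shard transfer mechanism, which makes promotion completely transparent to the application. Both read and write requests are supported during promotion.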

Tiered multitenancy with tenant promotion

Configure tiered multitenancy

To take advantage of tiered multitenancy, you need to create a collection with user-defined (aka custom) sharding and create a fallback shard in it.

PUT /collections/{collection_name}

{

"shard_number": 1,

"sharding_method": "custom"

// ... other collection parameters

}

from qdrant_client import QdrantClient, models

client = QdrantClient(url="http://localhost:6333")

client.create_collection(

collection_name="{collection_name}",

shard_number=1,

sharding_method=models.ShardingMethod.CUSTOM,

# ... other collection parameters

)

import { QdrantClient } from "@qdrant/js-client-rest";

const client = new QdrantClient({ host: "localhost", port: 6333 });

client.createCollection("{collection_name}", {

shard_number: 1,

sharding_method: "custom",

// ... other collection parameters

});

use qdrant_client::qdrant::{

CreateCollectionBuilder, Distance, ShardingMethod, VectorParamsBuilder,

};

use qdrant_client::Qdrant;

let client = Qdrant::from_url("http://localhost:6334").build()?;

client

.create_collection(

CreateCollectionBuilder::new("{collection_name}")

.vectors_config(VectorParamsBuilder::new(300, Distance::Cosine))

.shard_number(1)

.sharding_method(ShardingMethod::Custom.into()),

)

.await?;

import static io.qdrant.client.ShardKeyFactory.shardKey;

import io.qdrant.client.QdrantClient;

import io.qdrant.client.QdrantGrpcClient;

import io.qdrant.client.grpc.Collections.CreateCollection;

import io.qdrant.client.grpc.Collections.ShardingMethod;

QdrantClient client =

new QdrantClient(QdrantGrpcClient.newBuilder("localhost", 6334, false).build());

client

.createCollectionAsync(

CreateCollection.newBuilder()

.setCollectionName("{collection_name}")

// ... other collection parameters

.setShardNumber(1)

.setShardingMethod(ShardingMethod.Custom)

.build())

.get();

using Qdrant.Client;

using Qdrant.Client.Grpc;

var client = new QdrantClient("localhost", 6334);

await client.CreateCollectionAsync(

collectionName: "{collection_name}",

// ... other collection parameters

shardNumber: 1,

shardingMethod: ShardingMethod.Custom

);

import (

"context"

"github.com/qdrant/go-client/qdrant"

)

client, err := qdrant.NewClient(&qdrant.Config{

Host: "localhost",

Port: 6334,

})

client.CreateCollection(context.Background(), &qdrant.CreateCollection{

CollectionName: "{collection_name}",

// ... other collection parameters

ShardNumber: qdrant.PtrOf(uint32(1)),

ShardingMethod: qdrant.ShardingMethod_Custom.Enum(),

})

Start by creating a fallback shard, which will be used to store small tenants. We name it default.

PUT /collections/{collection_name}/shards

{

"shard_key": "default"

}

from qdrant_client import QdrantClient, models

client = QdrantClient(url="http://localhost:6333")

client.create_shard_key("{collection_name}", "default")

import { QdrantClient } from "@qdrant/js-client-rest";

const client = new QdrantClient({ host: "localhost", port: 6333 });

client.createShardKey("{collection_name}", {

shard_key: "default"

});

use qdrant_client::qdrant::{

CreateShardKeyBuilder, CreateShardKeyRequestBuilder

};

use qdrant_client::Qdrant;

let client = Qdrant::from_url("http://localhost:6334").build()?;

client

.create_shard_key(

CreateShardKeyRequestBuilder::new("{collection_name}")

.request(CreateShardKeyBuilder::default().shard_key("default".to_string())),

)

.await?;

import static io.qdrant.client.ShardKeyFactory.shardKey;

import io.qdrant.client.QdrantClient;

import io.qdrant.client.QdrantGrpcClient;

import io.qdrant.client.grpc.Collections.CreateShardKey;

import io.qdrant.client.grpc.Collections.CreateShardKeyRequest;

QdrantClient client =

new QdrantClient(QdrantGrpcClient.newBuilder("localhost", 6334, false).build());

client.createShardKeyAsync(CreateShardKeyRequest.newBuilder()

.setCollectionName("{collection_name}")

.setRequest(CreateShardKey.newBuilder()

.setShardKey(shardKey("default"))

.build())

.build()).get();

using Qdrant.Client;

using Qdrant.Client.Grpc;

var client = new QdrantClient("localhost", 6334);

await client.CreateShardKeyAsync(

"{collection_name}",

new CreateShardKey { ShardKey = new ShardKey { Keyword = "default", } }

);

import (

"context"

"github.com/qdrant/go-client/qdrant"

)

client, err := qdrant.NewClient(&qdrant.Config{

Host: "localhost",

Port: 6334,

})

client.CreateShardKey(context.Background(), "{collection_name}", &qdrant.CreateShardKey{

ShardKey: qdrant.NewShardKey("default"),

})

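Because the collection serves both dedicated and shared tenants, we still need to configure payload-based tenancy for it, as described in the Partition by payload section above. That is, we need to create a payload index with is_tenant=true for the group_id field.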

PUT /collections/{collection_name}/index

{

"field_name": "group_id",

"field_schema": {

"type": "keyword",

"is_tenant": true

}

}

client.create_payload_index(

collection_name="{collection_name}",

field_name="group_id",

field_schema=models.KeywordIndexParams(

type="keyword",

is_tenant=True,

),

)

client.createPayloadIndex("{collection_name}", {

field_name: "group_id",

field_schema: {

type: "keyword",

is_tenant: true,

},

});

use qdrant_client::qdrant::{

CreateFieldIndexCollectionBuilder,

KeywordIndexParamsBuilder,

FieldType

};

use qdrant_client::{Qdrant, QdrantError};

let client = Qdrant::from_url("http://localhost:6334").build()?;

client.create_field_index(

CreateFieldIndexCollectionBuilder::new(

"{collection_name}",

"group_id",

FieldType::Keyword,

).field_index_params(

KeywordIndexParamsBuilder::default()

.is_tenant(true)

)

).await?;

import io.qdrant.client.QdrantClient;

import io.qdrant.client.QdrantGrpcClient;

import io.qdrant.client.grpc.Collections.PayloadIndexParams;

import io.qdrant.client.grpc.Collections.PayloadSchemaType;

import io.qdrant.client.grpc.Collections.KeywordIndexParams;

QdrantClient client =

new QdrantClient(QdrantGrpcClient.newBuilder("localhost", 6334, false).build());

client

.createPayloadIndexAsync(

"{collection_name}",

"group_id",

PayloadSchemaType.Keyword,

PayloadIndexParams.newBuilder()

.setKeywordIndexParams(

KeywordIndexParams.newBuilder()

.setIsTenant(true)

.build())

.build(),

null,

null,

null)

.get();

using Qdrant.Client;

var client = new QdrantClient("localhost", 6334);

await client.CreatePayloadIndexAsync(

collectionName: "{collection_name}",

fieldName: "group_id",

schemaType: PayloadSchemaType.Keyword,

indexParams: new PayloadIndexParams

{

KeywordIndexParams = new KeywordIndexParams

{

IsTenant = true

}

}

);

import (

"context"

"github.com/qdrant/go-client/qdrant"

)

client, err := qdrant.NewClient(&qdrant.Config{

Host: "localhost",

Port: 6334,

})

client.CreateFieldIndex(context.Background(), &qdrant.CreateFieldIndexCollection{

CollectionName: "{collection_name}",

FieldName: "group_id",

FieldType: qdrant.FieldType_FieldTypeKeyword.Enum(),

FieldIndexParams: qdrant.NewPayloadIndexParams(

&qdrant.KeywordIndexParams{

IsTenant: qdrant.PtrOf(true),

}),

})

Query a tiered multitenant collection

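Now we can start uploading data into the collection. One important difference from simple payload-based multitenancy is that we now need to specify a shard key selector in every request, so that the request is routed to the correct shard.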

The shard key selector specifies two keys:

- target shard: the name of the tenant's dedicated shard (which may or may not exist).

- fallback shard: the name of the shared fallback shard (default in our case).

PUT /collections/{collection_name}/points

{

"points": [

{

"id": 1,

"payload": {"group_id": "user_1"},

"vector": [0.9, 0.1, 0.1]

}

],

"shard_key": {

"fallback": "default",

"target": "user_1"

}

}

client.upsert(

collection_name="{collection_name}",

points=[

models.PointStruct(

id=1,

payload={"group_id": "user_1"},

vector=[0.9, 0.1, 0.1],

),

],

shard_key_selector=models.ShardKeyWithFallback(

target="user_1",

fallback="default"

)

)

import { QdrantClient } from "@qdrant/js-client-rest";

const client = new QdrantClient({ host: "localhost", port: 6333 });

client.upsert("{collection_name}", {

points: [

{

id: 1,

payload: { group_id: "user_1" },

vector: [0.9, 0.1, 0.1],

}

],

shard_key: {

target: "user_1",

fallback: "default"

}

});

use qdrant_client::qdrant::{PointStruct, ShardKeySelectorBuilder, UpsertPointsBuilder};

use qdrant_client::Qdrant;

let client = Qdrant::from_url("http://localhost:6334").build()?;

let shard_key_selector = ShardKeySelectorBuilder::with_shard_key("user_1")

.fallback("default")

.build();

client

.upsert_points(

UpsertPointsBuilder::new(

"{collection_name}",

vec![

PointStruct::new(

1,

vec![0.9, 0.1, 0.1],

[("group_id", "user_1".into())]

),

],

)

.shard_key_selector(shard_key_selector),

)

.await?;

import java.util.List;

import java.util.Map;

import static io.qdrant.client.ShardKeyFactory.shardKey;

import io.qdrant.client.QdrantClient;

import io.qdrant.client.QdrantGrpcClient;

import io.qdrant.client.grpc.Points.PointStruct;

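import io.qdrant.client.grpc.Points.ShardKeySelector;

import io.qdrant.client.grpc.Points.UpsertPoints;

import static io.qdrant.client.PointIdFactory.id;

import static io.qdrant.client.ValueFactory.value;

import static io.qdrant.client.VectorsFactory.vectors;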

QdrantClient client =

new QdrantClient(QdrantGrpcClient.newBuilder("localhost", 6334, false).build());

client

.upsertAsync(

UpsertPoints.newBuilder()

.setCollectionName("{collection_name}")

.addAllPoints(

List.of(

PointStruct.newBuilder()

.setId(id(1))

.setVectors(vectors(0.9f, 0.1f, 0.1f))

.putAllPayload(Map.of("group_id", value("user_1")))

.build()))

.setShardKeySelector(

ShardKeySelector.newBuilder()

.addShardKeys(shardKey("user_1"))

.setFallback(shardKey("default"))

.build())

.build())

.get();

using Qdrant.Client;

using Qdrant.Client.Grpc;

var client = new QdrantClient("localhost", 6334);

await client.UpsertAsync(

collectionName: "{collection_name}",

points: new List<PointStruct>

{

new()

{

Id = 1,

Vectors = new[] { 0.9f, 0.1f, 0.1f },

Payload = { ["group_id"] = "user_1" }

}

},

shardKeySelector: new ShardKeySelector {

ShardKeys = { new List<ShardKey> { "user_1" } },

Fallback = new ShardKey { Keyword = "default" }

}

);

import (

"context"

"github.com/qdrant/go-client/qdrant"

)

client, err := qdrant.NewClient(&qdrant.Config{

Host: "localhost",

Port: 6334,

})

client.Upsert(context.Background(), &qdrant.UpsertPoints{

CollectionName: "{collection_name}",

Points: []*qdrant.PointStruct{

{

Id: qdrant.NewIDNum(1),

Vectors: qdrant.NewVectors(0.9, 0.1, 0.1),

Payload: qdrant.NewValueMap(map[string]any{"group_id": "user_1"}),

},

},

ShardKeySelector: &qdrant.ShardKeySelector{

ShardKeys: []*qdrant.ShardKey{

qdrant.NewShardKey("user_1"),

},

Fallback: qdrant.NewShardKey("default"),

},

})

The routing logic works as follows:

- If the target shard exists and is active, the request is routed to it.

- If the target shard does not exist, the request is routed to the fallback shard.
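The routing rules above can be pictured with a small, purely illustrative sketch. It does not call Qdrant; resolve_shard and the shard_states map are hypothetical names invented for this example. The text does not spell out what happens when the target shard exists but is not yet active, so the sketch assumes such a shard is treated like a missing one.

```python
from typing import Dict


def resolve_shard(target: str, fallback: str, shard_states: Dict[str, str]) -> str:
    """Return the shard key a request would be routed to.

    shard_states is a hypothetical map from shard key to replica state,
    for example {"default": "Active", "user_2": "Active"}.
    """
    # A dedicated shard is used only when it exists and is active.
    if shard_states.get(target) == "Active":
        return target
    # Assumption: a missing or not-yet-active target falls back to the shared shard.
    return fallback


shard_states = {"default": "Active", "user_2": "Active", "user_3": "Partial"}

small_tenant_shard = resolve_shard("user_1", "default", shard_states)
large_tenant_shard = resolve_shard("user_2", "default", shard_states)
promoting_tenant_shard = resolve_shard("user_3", "default", shard_states)
```

Here user_1 has no dedicated shard and lands on default, while user_2 is served from its own shard. The application sends the same selector in both cases.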

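Similarly, when querying points, we need to specify the shard key selector and filter by group_id. Note that the filter match value should always be the same as the target shard key.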
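As a minimal sketch of the rule above, the helper below builds a request body for POST /collections/{collection_name}/points/query in which the filter value and the target shard key are derived from the same tenant ID, so they cannot drift apart. The helper name build_query_body is hypothetical, and the sketch assumes that the query endpoint accepts a shard_key object with the same target and fallback shape shown for the upsert request.

```python
import json
from typing import Any, Dict, List


def build_query_body(
    tenant_id: str,
    query_vector: List[float],
    fallback: str = "default",
    limit: int = 10,
) -> Dict[str, Any]:
    """Build a query body whose filter and shard key selector agree on the tenant."""
    return {
        "query": query_vector,
        # Restrict results to this tenant's points.
        "filter": {
            "must": [{"key": "group_id", "match": {"value": tenant_id}}]
        },
        "limit": limit,
        # Assumption: same selector shape as in the upsert request.
        "shard_key": {"target": tenant_id, "fallback": fallback},
    }


body = build_query_body("user_1", [0.1, 0.1, 0.9])
print(json.dumps(body, indent=2))
```

Deriving both values from a single argument is a design choice for this example; it removes the chance of querying one tenant's shard with another tenant's filter.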

Promote a tenant to a dedicated shard

Once a tenant has grown large enough, you can promote it to its own dedicated shard. To do that, first create a new shard for the tenant:

PUT /collections/{collection_name}/shards

{

"shard_key": "user_1",

"initial_state": "Partial"

}

from qdrant_client import QdrantClient, models

client = QdrantClient(url="http://localhost:6333")

client.create_shard_key(

"{collection_name}",

shard_key="user_1",

initial_state=models.ReplicaState.PARTIAL

)

import { QdrantClient } from "@qdrant/js-client-rest";

const client = new QdrantClient({ host: "localhost", port: 6333 });

client.createShardKey("{collection_name}", {

shard_key: "user_1",

initial_state: "Partial"

});

use qdrant_client::qdrant::{

CreateShardKeyBuilder, CreateShardKeyRequestBuilder

};

use qdrant_client::qdrant::ReplicaState;

use qdrant_client::Qdrant;

let client = Qdrant::from_url("http://localhost:6334").build()?;

client

.create_shard_key(

CreateShardKeyRequestBuilder::new("{collection_name}")

.request(

CreateShardKeyBuilder::default()

.shard_key("user_1".to_string())

.initial_state(ReplicaState::Partial)

),

)

.await?;

import static io.qdrant.client.ShardKeyFactory.shardKey;

import io.qdrant.client.QdrantClient;

import io.qdrant.client.QdrantGrpcClient;

import io.qdrant.client.grpc.Collections.CreateShardKey;

import io.qdrant.client.grpc.Collections.CreateShardKeyRequest;

import io.qdrant.client.grpc.Collections.ReplicaState;

QdrantClient client =

new QdrantClient(QdrantGrpcClient.newBuilder("localhost", 6334, false).build());

client.createShardKeyAsync(CreateShardKeyRequest.newBuilder()

.setCollectionName("{collection_name}")

.setRequest(CreateShardKey.newBuilder()

.setShardKey(shardKey("user_1"))

.setInitialState(ReplicaState.PARTIAL)

.build())

.build()).get();

using Qdrant.Client;

using Qdrant.Client.Grpc;

var client = new QdrantClient("localhost", 6334);

await client.CreateShardKeyAsync(

"{collection_name}",

new CreateShardKey {

ShardKey = new ShardKey { Keyword = "user_1" },

InitialState = ReplicaState.Partial

}

);

import (

"context"

"github.com/qdrant/go-client/qdrant"

)

client, err := qdrant.NewClient(&qdrant.Config{

Host: "localhost",

Port: 6334,

})

client.CreateShardKey(

context.Background(),

"{collection_name}",

&qdrant.CreateShardKey{

ShardKey: qdrant.NewShardKey("user_1"),

InitialState: qdrant.ReplicaState_PARTIAL,

},

)

Note that we create the shard in the Partial state, since data still needs to be transferred into it.

To start the data transfer, there is another API method called replicate_points:

POST /collections/{collection_name}/cluster

{

"replicate_points": {

"filter": {

"must": {

"key": "group_id",

"match": {

"value": "user_1"

}

}

},

"from_shard_key": "default",

"to_shard_key": "user_1"

}

}

from qdrant_client import QdrantClient, models

client = QdrantClient(url="http://localhost:6333")

client.cluster_collection_update(

collection_name="{collection_name}",

cluster_operation=models.ReplicatePointsOperation(

replicate_points=models.ReplicatePoints(

from_shard_key="default",

to_shard_key="user_1",

filter=models.Filter(

must=[

models.FieldCondition(

key="group_id",

match=models.MatchValue(

value="user_1",

)

)

]

)

)

)

)

import { QdrantClient } from "@qdrant/js-client-rest";

const client = new QdrantClient({ host: "localhost", port: 6333 });

client.updateCollectionCluster("{collection_name}", {

replicate_points: {

filter: {

must: {

key: "group_id",

match: {

value: "user_1"

}

}

},

from_shard_key: "default",

to_shard_key: "user_1"

}

});

use qdrant_client::qdrant::{

update_collection_cluster_setup_request::Operation, Condition, Filter,

ReplicatePointsBuilder, ShardKey, UpdateCollectionClusterSetupRequest,

};

use qdrant_client::Qdrant;

let client = Qdrant::from_url("http://localhost:6334").build()?;

client

.update_collection_cluster_setup(UpdateCollectionClusterSetupRequest {

collection_name: "{collection_name}".to_string(),

operation: Some(Operation::ReplicatePoints(

ReplicatePointsBuilder::new(

ShardKey::from("default"),

ShardKey::from("user_1"),

)

.filter(Filter::must([Condition::matches(

"group_id",

"user_1".to_string(),

)]))

.build(),

)),

timeout: None,

})

.await?;

import static io.qdrant.client.ConditionFactory.matchKeyword;

import static io.qdrant.client.QueryFactory.nearest;

import static io.qdrant.client.ShardKeyFactory.shardKey;

import io.qdrant.client.grpc.Collections.ReplicatePoints;

import io.qdrant.client.grpc.Collections.UpdateCollectionClusterSetupRequest;

import io.qdrant.client.grpc.Points.Filter;

import io.qdrant.client.QdrantClient;

import io.qdrant.client.QdrantGrpcClient;

QdrantClient client =

new QdrantClient(QdrantGrpcClient.newBuilder("localhost", 6334, false).build());

client

.updateCollectionClusterSetupAsync(

UpdateCollectionClusterSetupRequest.newBuilder()

.setCollectionName("{collection_name}")

.setReplicatePoints(

ReplicatePoints.newBuilder()

.setFromShardKey(shardKey("default"))

.setToShardKey(shardKey("user_1"))

.setFilter(

Filter.newBuilder().addMust(matchKeyword("group_id", "user_1")).build())

.build())

.build())

.get();

using Qdrant.Client;

using Qdrant.Client.Grpc;

using static Qdrant.Client.Grpc.Conditions;

var client = new QdrantClient("localhost", 6334);

await client.UpdateCollectionClusterSetupAsync(new()

{

CollectionName = "{collection_name}",

ReplicatePoints = new()

{

FromShardKey = "default",

ToShardKey = "user_1",

Filter = MatchKeyword("group_id", "user_1")

}

});

import (

"context"

"github.com/qdrant/go-client/qdrant"

)

client, err := qdrant.NewClient(&qdrant.Config{

Host: "localhost",

Port: 6334,

})

client.UpdateClusterCollectionSetup(context.Background(), qdrant.NewUpdateCollectionClusterReplicatePoints(

"{collection_name}", &qdrant.ReplicatePoints{

FromShardKey: qdrant.NewShardKey("default"),

ToShardKey: qdrant.NewShardKey("user_1"),

Filter: &qdrant.Filter{

Must: []*qdrant.Condition{

qdrant.NewMatch("group_id", "user_1"),

},

},

},

))

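Once the transfer is complete, the target shard becomes Active, and all requests for the tenant are routed to it automatically. At this point, it is safe to delete the tenant's data from the shared fallback shard to free up space.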
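The cleanup step is described above without an example, so here is a hedged sketch of what the request body might look like. It assumes the standard delete-by-filter endpoint, POST /collections/{collection_name}/points/delete, and assumes that a plain shard_key value scopes the deletion to the fallback shard only. The helper name build_cleanup_body is hypothetical.

```python
from typing import Any, Dict


def build_cleanup_body(tenant_id: str, fallback: str = "default") -> Dict[str, Any]:
    """Build a delete-by-filter body that removes a promoted tenant's leftovers."""
    return {
        # Match only the promoted tenant's points.
        "filter": {
            "must": [{"key": "group_id", "match": {"value": tenant_id}}]
        },
        # Assumption: a plain shard key limits the delete to the shared shard,
        # leaving the tenant's new dedicated shard untouched.
        "shard_key": fallback,
    }


cleanup_body = build_cleanup_body("user_1")
```

Note that this body deliberately does not use the target and fallback selector: once user_1 has an active dedicated shard, such a selector would route the delete to the dedicated shard instead of the shared one.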

Limitations

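- Currently, the fallback shard itself may only contain a single shard ID. This means that all small tenants must fit on a single peer of the cluster. This restriction will be improved in future releases.

- Like collections, dedicated shards introduce some resource overhead. Creating more than a thousand dedicated shards per cluster is not recommended. The recommended threshold for promoting a tenant is the same as the indexing threshold for a single collection, around 20K points.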
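The two numbers in these limitations can be turned into a simple promotion policy on the application side. The sketch below is only one possible reading: the constant names and should_promote are hypothetical, the thresholds are the approximate figures quoted above, and how you obtain the per-tenant point count is left to your application.

```python
PROMOTION_THRESHOLD = 20_000   # roughly the indexing threshold quoted above
MAX_DEDICATED_SHARDS = 1_000   # recommended ceiling per cluster


def should_promote(tenant_point_count: int, dedicated_shard_count: int) -> bool:
    """Decide whether a tenant is worth moving to its own dedicated shard."""
    # Small tenants stay in the shared fallback shard.
    if tenant_point_count < PROMOTION_THRESHOLD:
        return False
    # Avoid exceeding the recommended number of dedicated shards.
    return dedicated_shard_count < MAX_DEDICATED_SHARDS


promote_large = should_promote(tenant_point_count=50_000, dedicated_shard_count=12)
promote_small = should_promote(tenant_point_count=3_000, dedicated_shard_count=12)
promote_when_full = should_promote(tenant_point_count=50_000, dedicated_shard_count=1_000)
```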